Our research

How should we navigate explosive AI progress?

Featured

Preparing for the Intelligence Explosion

William MacAskill & Fin Moorhouse

March 2025

AI that can accelerate research could drive a century of technological progress over just a few years. During such a period, new technological or political developments will raise consequential and hard-to-reverse decisions, in rapid succession. We call these developments grand challenges.

These challenges include new weapons of mass destruction, AI-enabled autocracies, races to grab offworld resources, and digital beings worthy of moral consideration, as well as opportunities to dramatically improve quality of life and collective decision-making.

We argue that these challenges cannot always be delegated to future AI systems, and suggest things we can do today to meaningfully improve our prospects. AGI preparedness is therefore not just about ensuring that advanced AI systems are aligned: we should be preparing, now, for the disorienting range of developments an intelligence explosion would bring.

AI-Enabled Coups: How a Small Group Could Use AI to Seize Power

Tom Davidson, Lukas Finnveden & Rose Hadshar

April 2025

The development of AI that is more broadly capable than humans will create a new and serious threat: AI-enabled coups. An AI-enabled coup could be staged by a very small group, or just a single person, and could occur even in established democracies. Sufficiently advanced AI will introduce three novel dynamics that significantly increase coup risk. Firstly, military and government leaders could fully replace human personnel with AI systems that are singularly loyal to them, eliminating the need to gain human supporters for a coup. Secondly, leaders of AI projects could deliberately build AI systems that are secretly loyal to them, for example fully autonomous military robots that pass security tests but later execute a coup when deployed in military settings. Thirdly, senior officials within AI projects or the government could gain exclusive access to superhuman capabilities in weapons development, strategic planning, persuasion, and cyber offense, and use these to increase their power until they can stage a coup. To address these risks, AI projects should design and enforce rules against AI misuse, audit systems for secret loyalties, and share frontier AI systems with multiple stakeholders. Governments should establish principles for government use of advanced AI, increase oversight of frontier AI projects, and procure AI for critical systems from multiple independent providers.

Better Futures

Series

William MacAskill

August 2025

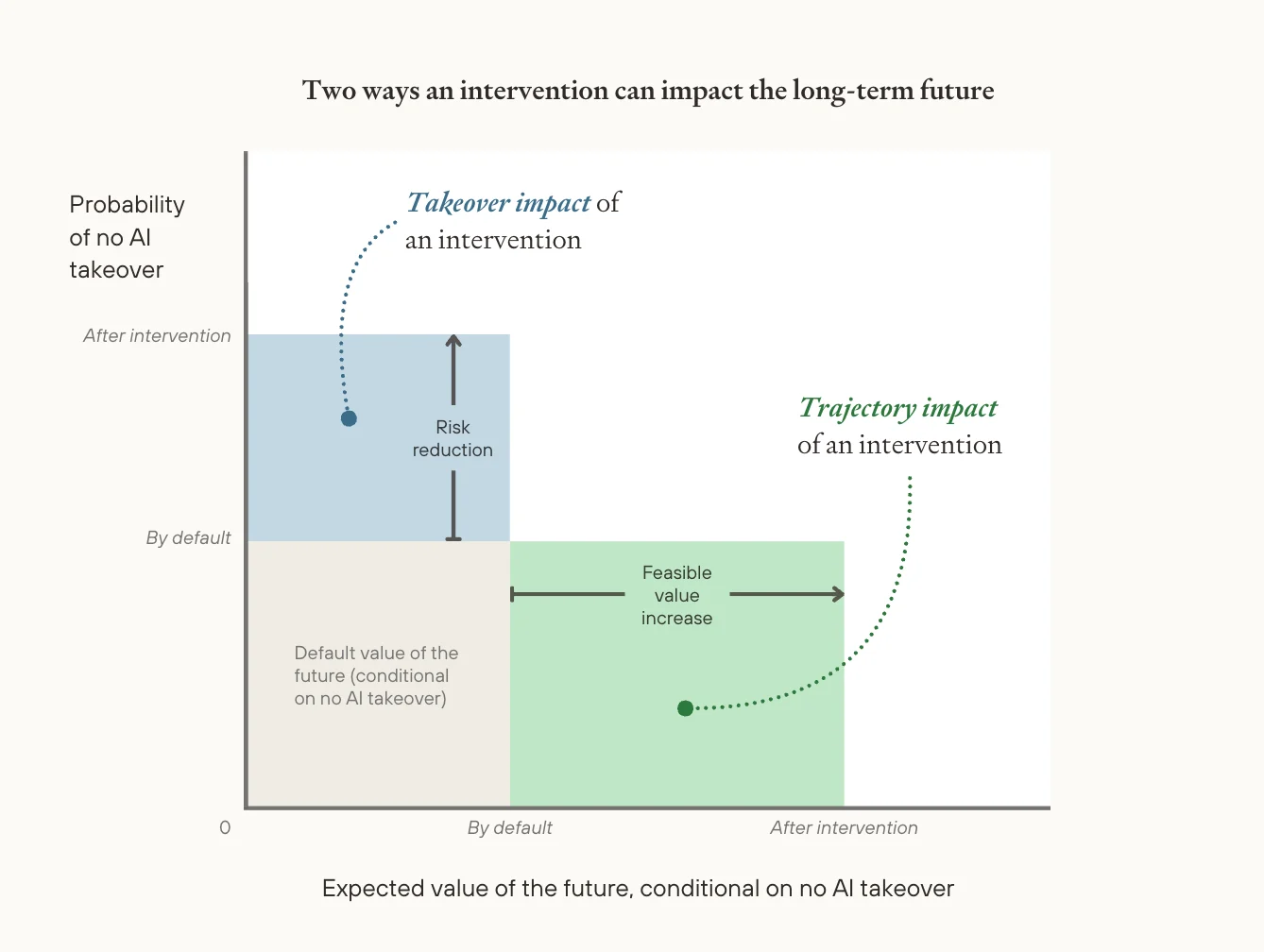

Suppose we want the future to go better. What should we do?

One approach is to avoid near-term catastrophes, like human extinction. This essay series explores a different, complementary, approach: improving on futures where we survive, to achieve a truly great future.

Will AI R&D Automation Cause a Software Intelligence Explosion?

Daniel Eth & Tom Davidson

March 2025

AI companies are increasingly using AI systems to accelerate AI research and development. Today’s AI systems help researchers write code, analyze research papers, and generate training data. Future systems could be significantly more capable – potentially automating the entire AI development cycle from formulating research questions and designing experiments to implementing, testing, and refining new AI systems. We argue that such systems could trigger a runaway feedback loop in which they quickly develop more advanced AI, which itself speeds up the development of even more advanced AI, resulting in extremely fast AI progress, even without the need for additional computer chips. Empirical evidence on the rate at which AI research efforts improve AI algorithms suggests that this positive feedback loop could overcome diminishing returns to continued AI research efforts. We evaluate two additional bottlenecks to rapid progress: training AI systems from scratch takes months, and improving AI algorithms often requires computationally expensive experiments. However, we find that there are possible workarounds that could enable a runaway feedback loop nonetheless.

AI Tools for Existential Security

Lizka Vaintrob & Owen Cotton-Barratt

March 2025

Humanity is not prepared for the AI-driven challenges we face. But the right AI tools could help us to anticipate and work together to meet these challenges — if they’re available in time. We can and should accelerate these tools.

Key applications include (1) epistemic tools, which improve human judgement; (2) coordination tools, which help diverse groups identify and work towards shared goals; and (3) risk-targeted tools, which address specific challenges.

We can accelerate important tools by investing in task-relevant data, lowering adoption barriers, and securing compute for key R&D. While background AI progress limits potential gains, even small speedups could be decisive.

This is a priority area. There is lots to do already, and there will quickly be more. We should get started, and we should plan for a world with abundant cognition.


Research


Design sketches: tools for strategic awareness

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Part 3 of Design sketches for a more sensible world

Abstract

Near-term AI could be used to power technologies that give individuals and organizations a deeper strategic awareness of the world around them, helping them spot opportunities and avoid pitfalls as they make plans. We think improved strategic awareness could be especially important for empowering humanity to handle the challenges that advanced AI is likely to bring. Here we sketch three technologies that build towards this vision.

Authors

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Topic

Macrostrategy

Design sketches for a more sensible world

Series

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Abstract

We think that near-term AI systems could transform our ability to reason and coordinate, significantly improving our chances of safely navigating the transition to advanced AI systems. This sequence gives a series of design sketches for specific technologies that we think could help. We hope that these sketches make a more sensible world easier to envision, and inspire people to start building the relevant tech.

Authors

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Topic

Modelling AI progress

Design Sketches: Angels-on-the-Shoulder

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Part 2 of Design sketches for a more sensible world

Abstract

Near-term AI could allow us to build many technological analogues to ‘angels-on-the-shoulder’: highly customized tools that help people to better navigate their environments or handle tricky situations in ways they’ll feel good about later. These could mean more endorsed decisions and fewer unforced errors. Here we sketch five technologies that build towards this vision.

Authors

Owen Cotton-Barratt, Lizka Vaintrob, Oly Sourbut & Rose Hadshar

Topic

Macrostrategy

The International AGI Project Series

Series

William MacAskill

Abstract

This is a series of papers and research notes on the idea that AGI should be developed as part of an international collaboration between governments. We aim to (i) assess how desirable an international AGI project is; and (ii) assess what the best version of an international AGI project (taking feasibility into account) would look like.

Author

William MacAskill

Topic

International governance

What an international project to develop AGI should look like

William MacAskill

Part 1 of The international AGI project series

Abstract

What would the best version of an international project to develop AGI look like? In this research note, I set out my tentative best guess: “Intelsat for AGI”. This would be a US-led international project modelled on Intelsat (an international project that set up the first global communications satellite network), with broad benefit sharing for non-members. The primary case for it is that, among possible international AGI projects, it looks unusually feasible, yet it would significantly reduce catastrophic risk compared to a US-only project.

Author

William MacAskill

Topic

International governance

AGI and World Government

William MacAskill & Rose Hadshar

Part 3 of The international AGI project series

Abstract

If there’s a large enough intelligence explosion, the first project to build AGI could organically become a de facto world government. In this note, we consider what implications this possibility has for AGI governance. We argue that this scenario makes it more desirable that AGI be developed by a multilateral coalition of democratic governments, under explicitly interim governance arrangements, and that non-participating countries receive major benefits and credible reassurances around their sovereignty.

Authors

William MacAskill & Rose Hadshar

Topics

International governance & Threat modelling

International AI projects and differential AI development

William MacAskill

Part 4 of The international AGI project series

Abstract

Proposals for an international AI project to manage risks from advanced AI generally require all frontier AI development to happen within that project, and with limitations. But some AI capabilities actively help with addressing risk. I argue that international projects should aim to limit only the most dangerous AI capabilities (in particular, AI R&D capabilities), while promoting helpful capabilities like forecasting and ethical deliberation. If this is technically feasible (which I’m uncertain about), it could increase our capacity to handle risk, reduce incentives to race, and help get industry on board with an international project.

Author

William MacAskill

Topics

International governance & Differential AI acceleration

A global convention to govern the intelligence explosion

William MacAskill

Part 5 of The international AGI project series

Abstract

There currently isn't a plan for how society should navigate an intelligence explosion. This research note proposes an international convention triggered when AI crosses defined capability thresholds. At that point, the US would pause frontier AI development for one month and convene other nations to draft treaties to govern the many challenges an intelligence explosion would throw up. The proposal may be feasible if agreements can be reached quickly enough, but it is unclear whether enforcement and the technical details would work in practice.

Author

William MacAskill

Topic

International governance

An overview of some international organisations, with their voting structures

Rose Hadshar

Part 6 of The international AGI project series

Abstract

This rough research note gives an overview of some international organisations and their voting structures, as background for thinking about the international governance of AGI.

Author

Rose Hadshar

Topic

International governance

The UN Charter: a case study in international governance

Research note

Part 7 of The international AGI project series

Abstract

The transition to advanced AI systems may eventually lead to some kind of international agreement to govern AI. An important historical case study for an agreement of this kind is the founding of the United Nations. This research note gives an overview of the creation of the UN Charter, before drawing some tentative conclusions for international AGI governance.

Topic

International governance

Short Timelines Aren't Obviously Higher-Leverage

William MacAskill & Mia Taylor

Abstract

Should we focus our efforts on worlds with short timelines to AGI? People often argue we should, because such worlds are higher-leverage than longer-timelines worlds. We disagree. In this research note, we argue that it’s at least unclear that shorter timelines are higher-leverage, and that, for many people, medium-length timelines will be higher-leverage than short timelines.

Authors

William MacAskill & Mia Taylor

Topic

Macrostrategy

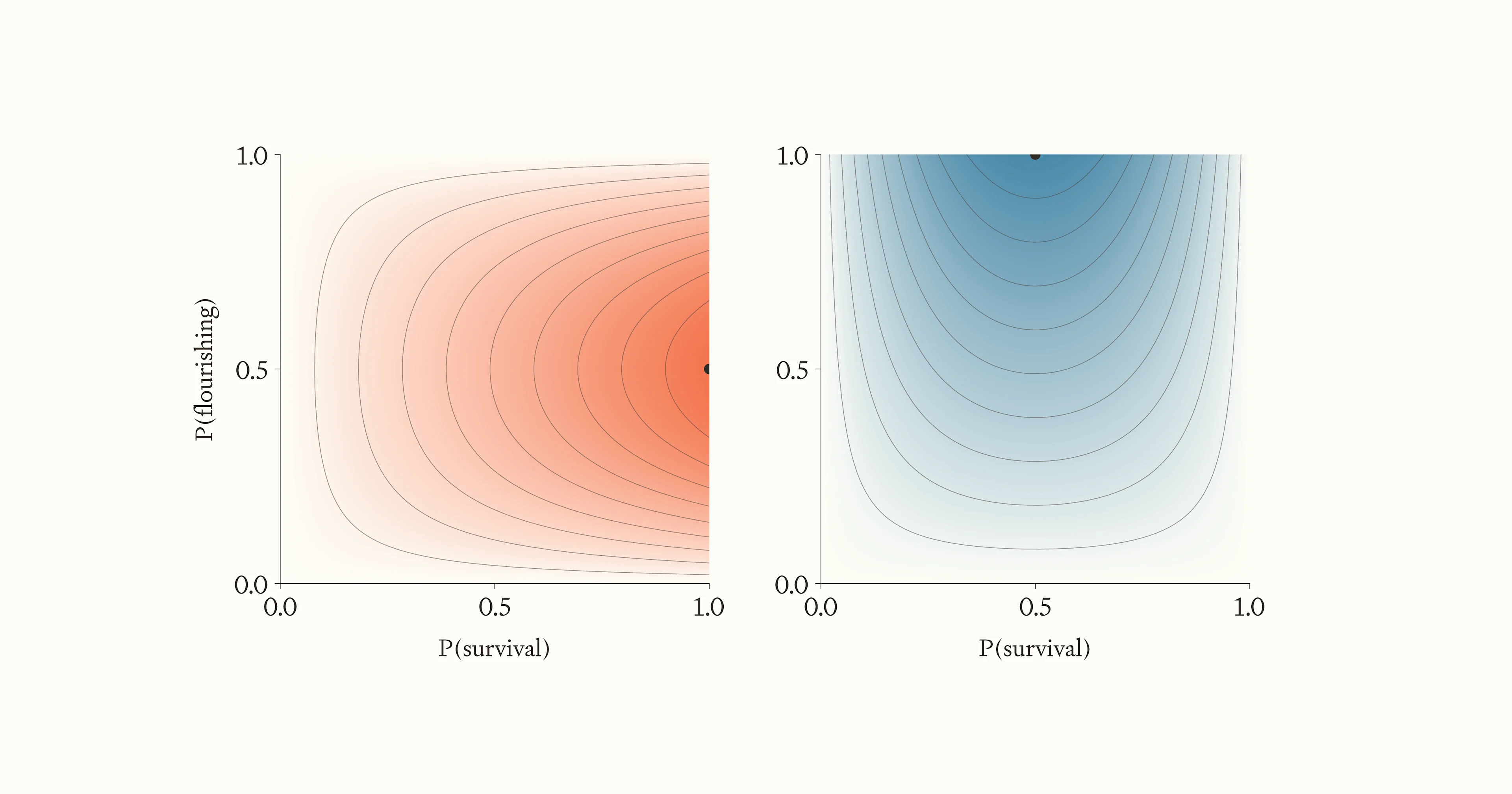

Is Flourishing Predetermined?

Fin Moorhouse & Carlo Leonardo Attubato

Abstract

Our overall credence in the chance that humanity flourishes might reflect some credence that flourishing is almost impossible, plus some credence that it’s very easy. If so, flourishing would seem overdetermined, and hence less tractable to work on than we thought. We consider how to formalise this argument.

Authors

Fin Moorhouse & Carlo Leonardo Attubato

Topic

Macrostrategy
