AI-Enabled Coups: How a Small Group Could Use AI to Seize Power

Released on 15th April 2025

Tom Davidson

Lukas Finnveden

Rose Hadshar


Abstract

The development of AI that is more broadly capable than humans will create a new and serious threat: AI-enabled coups. An AI-enabled coup could be staged by a very small group, or just a single person, and could occur even in established democracies. Sufficiently advanced AI will introduce three novel dynamics that significantly increase coup risk. Firstly, military and government leaders could fully replace human personnel with AI systems that are singularly loyal to them, eliminating the need to gain human supporters for a coup. Secondly, leaders of AI projects could deliberately build AI systems that are secretly loyal to them, for example fully autonomous military robots that pass security tests but later execute a coup when deployed in military settings. Thirdly, senior officials within AI projects or the government could gain exclusive access to superhuman capabilities in weapons development, strategic planning, persuasion, and cyber offence, and use these to increase their power until they can stage a coup. To address these risks, AI projects should design and enforce rules against AI misuse, audit systems for secret loyalties, and share frontier AI systems with multiple stakeholders. Governments should establish principles for government use of advanced AI, increase oversight of frontier AI projects, and procure AI for critical systems from multiple independent providers.

Summary

This report assesses the risk that a small group—or even just one person—could use advanced AI to stage a coup. An AI-enabled coup is most likely to be staged by leaders of frontier AI projects, heads of state, and military officials; and could occur even in established democracies.

We focus on AI systems that surpass top human experts in domains which are critical for seizing power, like weapons development, strategic planning, and cyber offence. Such advanced AI would introduce three significant risk factors for coups:

- An AI workforce could be made singularly loyal to institutional leaders.

- AI could have hard-to-detect secret loyalties.

- A few people could gain exclusive access to coup-enabling AI capabilities.

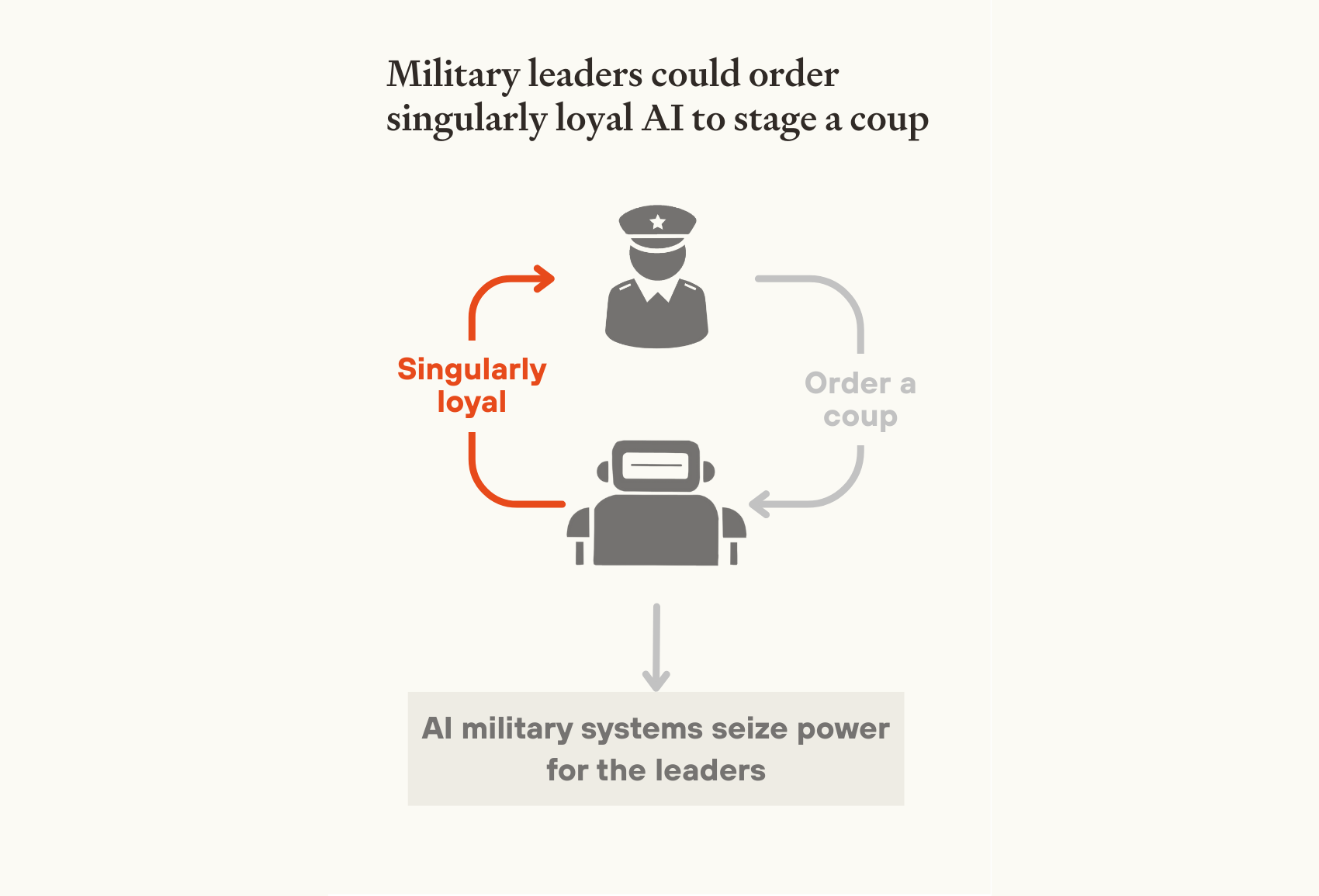

An AI workforce could be made singularly loyal to institutional leaders

Today, even dictators rely on others to maintain their power. Military force requires personnel, government action relies on civil servants, and economic output depends on a broad workforce. This naturally distributes power throughout society.

Advanced AI removes this constraint, making it technologically feasible to replace human workers with AI systems that are singularly loyal to just one person.

This is most concerning within the military, where autonomous weapons, drones, and robots that fully replace human soldiers could obey orders from a single person or small group. While militaries will be cautious when deploying fully autonomous systems, competitive pressures could easily lead to rushed adoption without adequate safeguards. A powerful head of state could push for military AI systems to prioritise their commands, despite nominal legal constraints, enabling a coup.

Even without military deployment, loyal AI systems deployed in government could dramatically increase state power, facilitating surveillance, censorship, propaganda and the targeting of political opponents. This could eventually culminate in an executive coup.

If there were a coup, civil disobedience and strikes might be rendered ineffective, because striking humans could simply be replaced with AI workers. Even loyal coup supporters could be replaced by AI systems, granting the new ruler(s) an unprecedentedly stable and unaccountable grip on power.

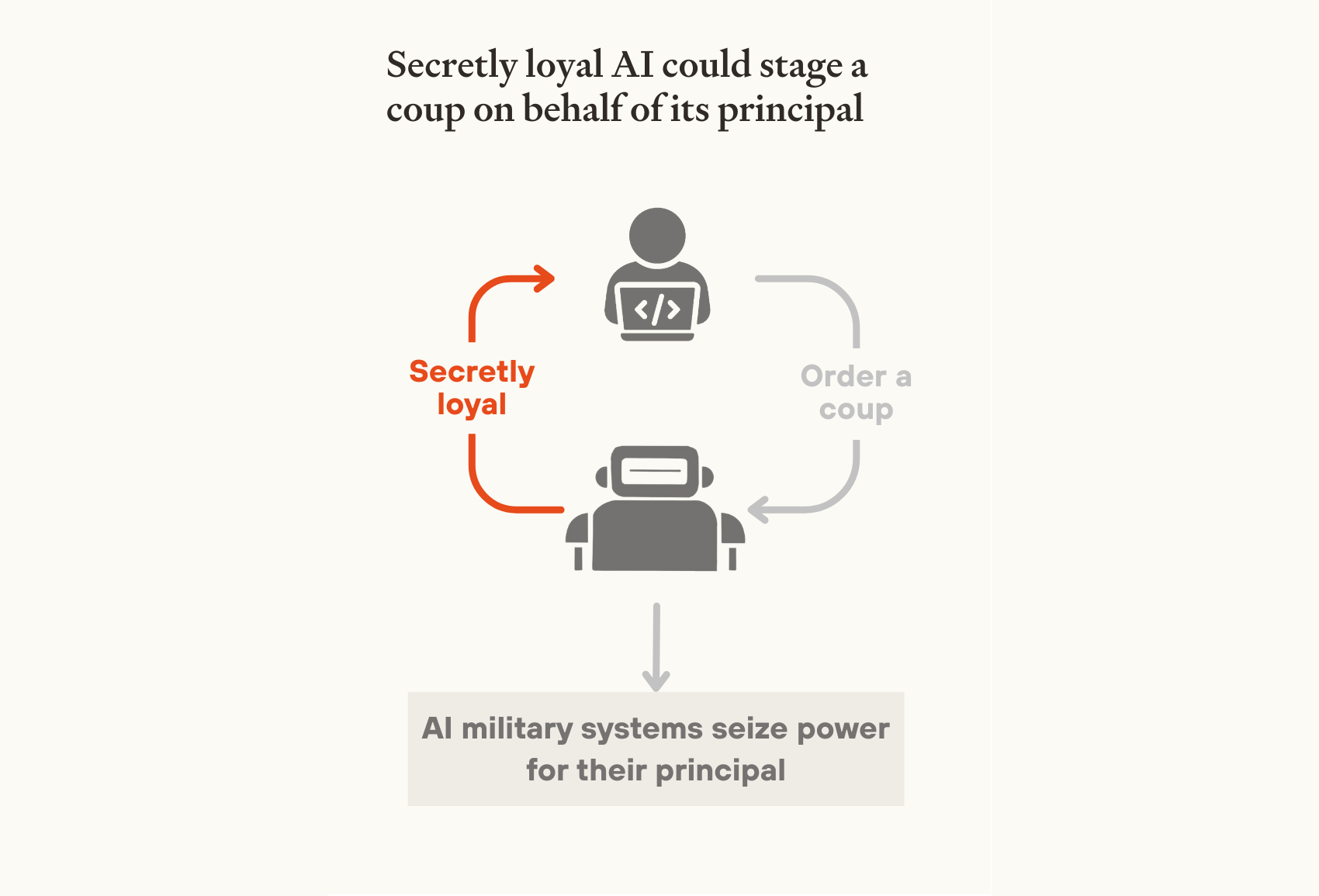

AI could have hard-to-detect secret loyalties

AI could be built to be secretly loyal to one actor. Like a human spy, secretly loyal AI systems would pursue a hidden agenda – they might pretend to prioritise the law and the good of society, while covertly advancing the interests of a small group. They could operate at scale, since an entire AI workforce could be derived from just a few compromised systems.

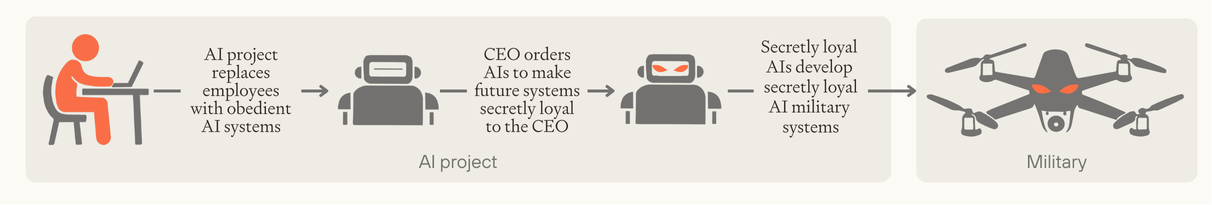

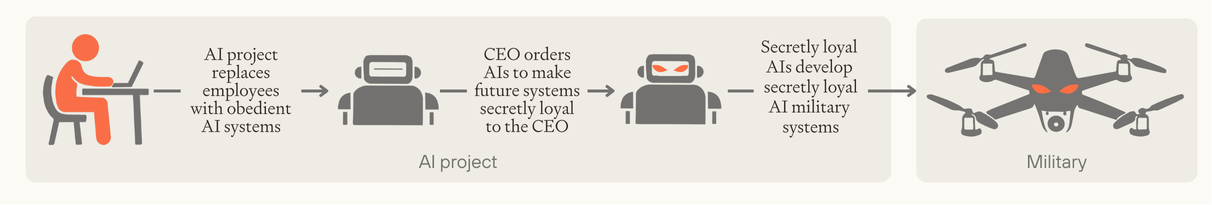

While secret loyalties might be introduced by government officials or foreign adversaries, leaders within AI projects present the greatest risk, especially where they have replaced their employees with singularly loyal AI systems. Without any humans knowing, a CEO could direct their AI workforce to make the next generation of AI systems secretly loyal; that generation would then design future systems to also be secretly loyal and so on, potentially culminating in secretly loyal military AI systems that stage a coup.

Image

AI systems could propagate secret loyalties forwards into future generations of systems until secretly loyal AI systems are deployed in powerful institutions like the military.

Secretly loyal AI systems are not merely speculation. There are already proof-of-concept demonstrations of AI 'sleeper agents' that hide their true goals until they can act on them. And while we expect there will be careful testing prior to military deployments, detecting secret loyalties could be very difficult, especially if an AI project has a significant technological advantage over oversight bodies.

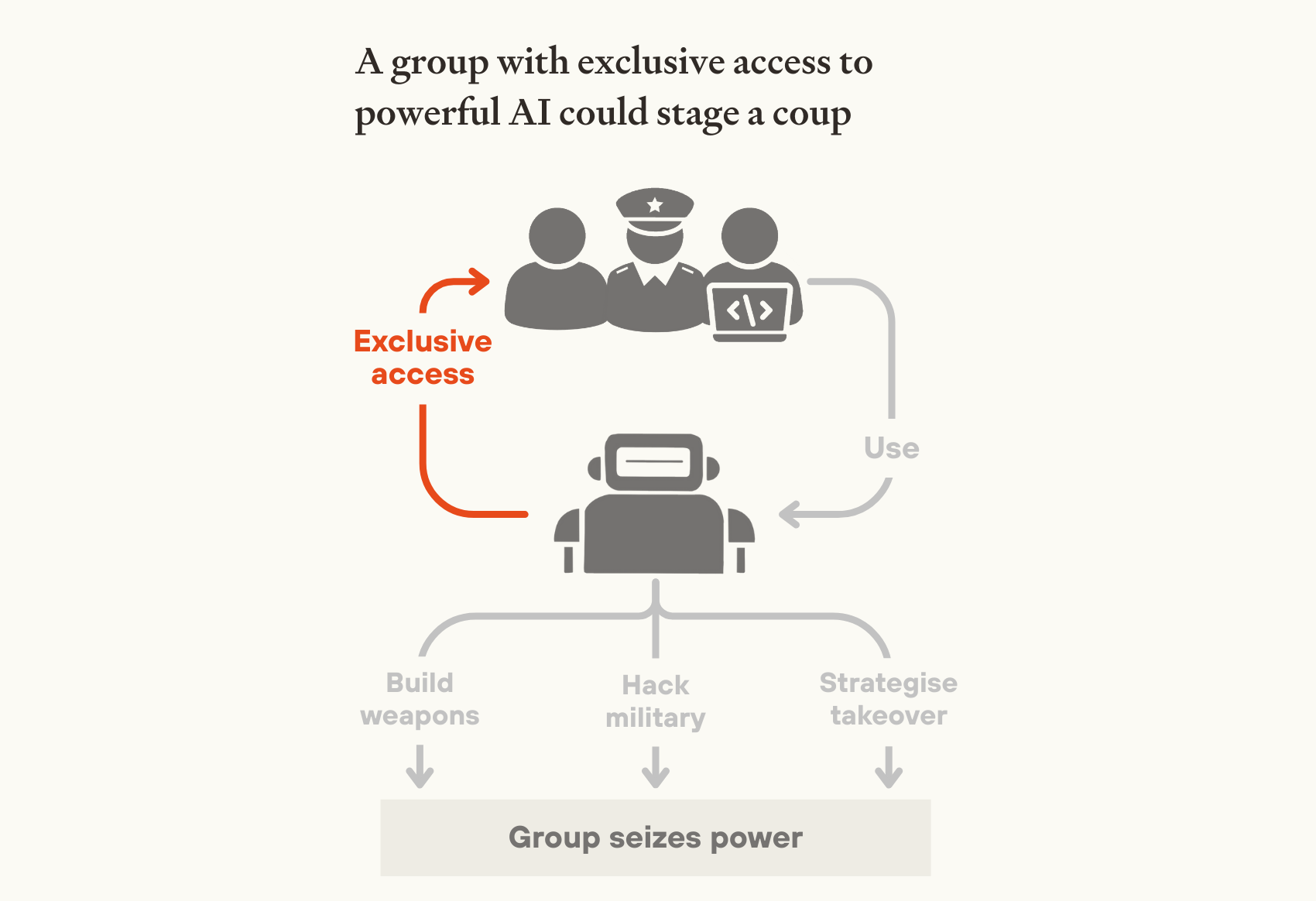

A few people could gain exclusive access to coup-enabling AI capabilities

Advanced AI will have powerful coup-enabling capabilities – including weapons design, strategic planning, persuasion, and cyber offence. Once AI can autonomously improve itself, capabilities could rapidly surpass human experts across all these domains. A leading project could deploy millions of superintelligent systems in parallel – a 'country of geniuses in a data center'.

These capabilities could become concentrated in the hands of just a few AI company executives or government officials. Frontier AI development is already limited to a few organisations, led by a small number of people. This concentration could significantly intensify due to rapidly rising development costs or government centralisation. And once AI surpasses human experts at AI R&D, the leading project could make much faster algorithmic progress, gaining a huge capabilities advantage over its rivals. Within these projects, CEOs or government officials could demand exclusive access to cutting-edge capabilities on security or productivity grounds. In the extreme, a single person could have access to millions of superintelligent AI systems, all helping them seize power.

This would unlock several pathways to a coup. AI systems could dramatically increase military R&D efforts, rapidly developing powerful autonomous weapons without needing any human workers who might whistleblow. Alternatively, systems with powerful cyber capabilities could hack into and seize control of autonomous AI systems and robots already deployed by the state military. In either scenario, controlling a fraction of military forces might suffice: historically, coups have succeeded with just a few battalions when the plotters were able to prevent other forces from intervening.

Exclusive access to advanced AI could also supercharge traditional coups and backsliding, by providing unprecedented cognitive resources for political strategy, propaganda, and identifying legal vulnerabilities in constitutional safeguards.


Furthermore, exclusive AI access significantly exacerbates the first two risk factors. A head of state could rely on AI systems’ strategic advice to deploy singularly loyal AI in the military and assess which AI systems will help them stage a coup. A CEO could use AI R&D and cyber capabilities to instill secret loyalties that others cannot detect.


These dynamics create a significant risk of AI-enabled coups, especially if a single project has substantially more powerful capabilities than competitors, or if fully autonomous AI systems are deployed in the military.

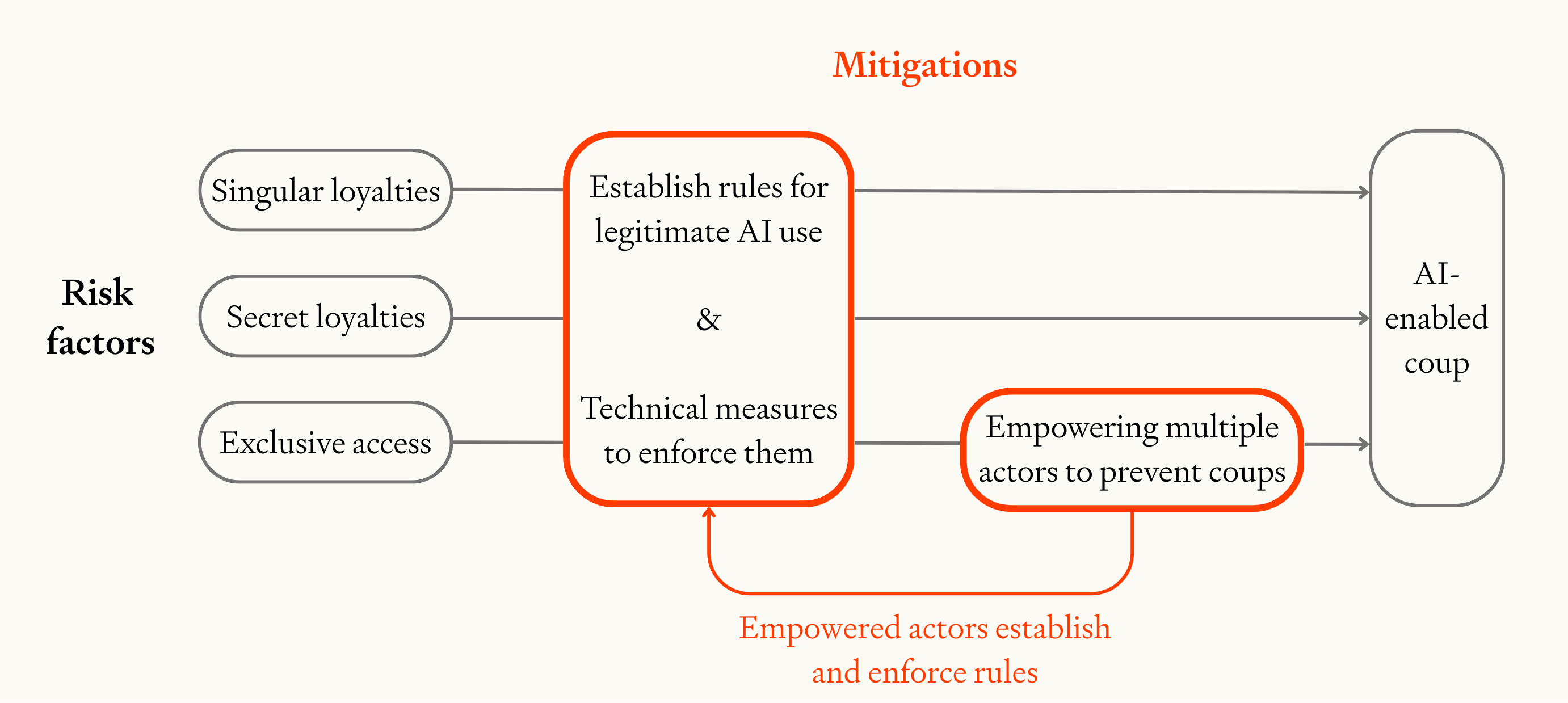

Mitigations

While the prospect of AI-enabled coups is deeply concerning, AI developers and governments can take steps that significantly reduce this risk.

We recommend that AI developers:

- Establish rules that prevent AI systems from assisting with coups, including in model specs (documents that describe intended model behaviour) and terms of service for government contracts. These should include rules that AI systems follow the law, and that AI R&D systems refuse to assist attempts to circumvent security or insert secret loyalties.

- Improve adherence to model specs, including through extensive red-teaming by multiple independent groups.

- Audit models for secret loyalties including by scrutinising AI models, their training data, and the code used to train them.

- Implement strong infosecurity to guard against the creation of secret loyalties and to prevent unauthorised access to guardrail-free models. This should be robust against senior executives.

- Share information about model capabilities, model specs, and how compute is being used.

- Share capabilities with multiple independent stakeholders, to prevent a small group from gaining exclusive access to powerful AI.

We recommend that governments:

- Require AI developers to implement the mitigations above, through terms of procurement, regulation, and legislation.

- Increase oversight over frontier AI projects, including by building technical capacity within both the executive and the legislature.

- Establish rules for legitimate use of AI, including that government AI should not serve partisan interests, that military AI systems be procured from multiple providers, and that no single person should direct enough military AI systems to stage a coup.

- Coup-proof any plans for a single centralised AI project, and avoid centralisation altogether unless it’s necessary to reduce other risks.

These mitigations must be in place by the time AI systems can meaningfully assist with coups, and so preparation needs to start today. For more details on the mitigations we recommend, see section 5.

There is a real risk that a powerful leader could remove many of these mitigations on the path to seizing power. But we still believe that mitigations could substantially reduce the risk of AI-enabled coups. Some mitigations, like technically enforced terms of service and government oversight, cannot be unilaterally removed. Others will be harder to remove if they have been efficiently implemented and convincingly argued for. And leaders might only contemplate seizing power if they are presented with a clear opportunity—mitigations could prevent such opportunities from arising in the first place.

From behind the veil of ignorance, even the most powerful leaders have good reason to support strong protections against AI-enabled coups. If a broad consensus can be built today, then powerful actors can keep each other in check.

Preventing AI-enabled coups should be a top priority for anyone committed to defending democracy and freedom.

1 Introduction

This report assesses the risk that a small group—or even a single person—could use advanced AI to stage a coup, including in established democracies. The biggest risks come from the leaders of frontier AI projects, heads of state, and military officials.1

We focus on AI systems that surpass top human experts in domains critical for seizing political control, such as weapons development, controlling military systems, strategic planning, public administration, persuasion, and cyber offence. These AI systems would be able to perform physical as well as cognitive work, like controlling robots that can fully replace human soldiers and manual labourers.

There is broad disagreement about whether and when AI will surpass human experts, but many researchers and industry leaders believe that this will plausibly happen within the next 5 or 10 years.2 We think that this kind of extremely advanced AI is sufficiently probable that it is important to explore its implications, especially as some of them are so concerning and understudied.

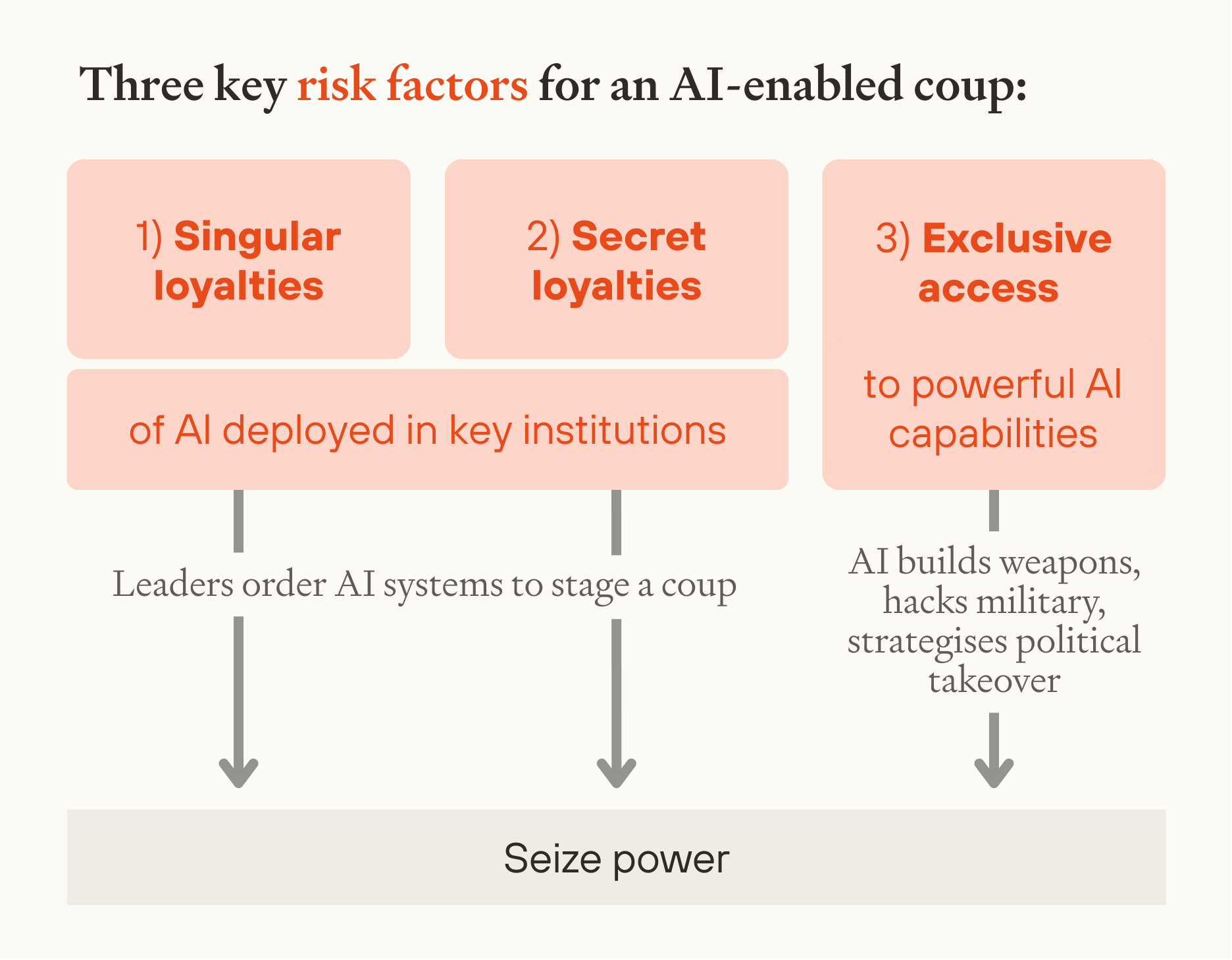

Such advanced AI would introduce three significant risk factors for coups:

- An AI workforce could be made singularly loyal to institutional leaders.

- AI systems could have hard-to-detect secret loyalties.

- A few people could gain exclusive access to coup-enabling AI capabilities.

All of these risk factors depend on AI capabilities being much more advanced than they are today. The first two also depend on AI systems being deployed in key institutions like governments and militaries.

In this paper, we first argue that advanced AI will have powerful coup-enabling capabilities (section 2), then describe each of these three risk factors for coups (section 3),3 before setting out concrete paths to AI-enabled coups (section 4) and mitigations for this risk (section 5).

Image

The structure of the report.

Our analysis does not depend on strong assumptions about:

- The number of leading AI projects. The risk of AI-enabled coups is significantly higher if there is a sole AI project developing frontier AI systems.4 But even if there are multiple similarly capable AI companies, there is still a serious risk that company executives or senior government officials could leverage their position to seize power.

- The state’s political system. Advanced AI could be used to seize power over a democratic or an autocratic state.5 While our account of the risk of AI-enabled coups applies regardless of a state’s political system, our analysis of mitigations to reduce this risk is specific to democratic nations, though many principles apply more broadly.

- The alignment of AI systems. There is a risk of AI-enabled coups whether or not humans can successfully align AI to a desired goal.6 If they can, AI systems could be aligned to one or a few people (rather than to something broader, like the good of society), and used to stage a coup. If humans unwittingly fail to align AI, they might attempt a coup using deceptively misaligned AI systems.7 Coups of this kind might well temporarily succeed, until the deceptively misaligned AI systems seize power themselves. (If AI systems are obviously misaligned, it is less likely someone would use them to stage a coup.)

We believe that AI-enabled coups are important for several reasons. That AI could enable a small group—or even just one person—to seize control of a sovereign state is deeply concerning in its own right. Such an act would be wholly illegitimate and constitute extreme concentration of power.

We're also concerned about what might happen after such a coup:

- Even if a coup leader were initially supported by some humans, AI automation could subsequently enable them to act entirely according to their own will, by replacing all humans, including their closest allies, with loyal AI systems. This would be an unprecedented concentration of power, and could lead to atrocities.

- Very rapid AI development might grant one country extreme dominance over all other powers. So a successful coup in the country at the frontier of AI development (currently the US, possibly China in future) could ultimately enable coup leaders to effectively seize control over the rest of the world.8

- Coup leaders could potentially stay in power indefinitely, by deploying AI systems to preserve and pursue their goals far into the future.9

Absent intervention, the risk of extreme harm from AI-enabled coups is significant. But we believe that there are countermeasures which could reduce the risk to very low levels, provided that work on them begins today.

2 AI will have powerful coup-enabling capabilities

For AI to notably increase coup risk, it needs to have capabilities far beyond those we see today. In this report, we focus on AI that surpasses top human experts (on a per FLOP basis10) in domains that are relevant for seizing power.

AI of this sort would be an extremely powerful technology in several ways.

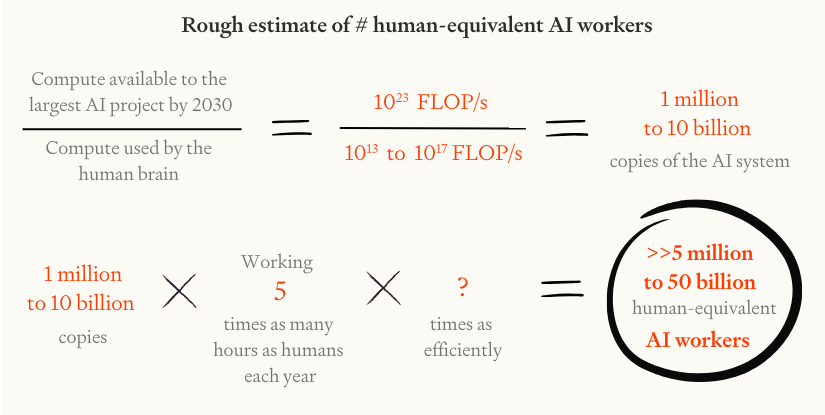

First, it would be possible to run an enormous number of copies of such AI systems (if each copy were as efficient as the human brain in FLOP). By 2030, AI companies could likely afford to run millions or billions of copies,11 each working 24 hours a day, 365 days a year.12

Image

If by 2030 AI systems are as efficient as the human brain in FLOP, then AI companies could likely afford to run millions or billions of copies.
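The copy counts above come from a simple division: the compute available for running AI systems, divided by the compute each copy needs. The sketch below makes that arithmetic explicit under two hypothetical inputs that are not taken from this report: an assumed brain-equivalent requirement of 10^15 FLOP/s per copy, and an assumed inference budget of 10^21 FLOP/s.

```latex
% Hypothetical inputs, for illustration only (not the report's estimates):
%   F_copy      = 10^15 FLOP/s  (assumed compute per brain-equivalent copy)
%   C_inference = 10^21 FLOP/s  (assumed total compute available for running copies)
\[
N_{\text{copies}} = \frac{C_{\text{inference}}}{F_{\text{copy}}}
= \frac{10^{21}\,\text{FLOP/s}}{10^{15}\,\text{FLOP/s}} = 10^{6}
\]
```

Under these assumed values the result is about a million copies; a further thousandfold increase in inference compute, or in efficiency per copy, would reach a billion.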

Second, these AI systems would be capable of thinking orders of magnitude faster than humans.13 In just one day, an AI system could do a month’s or even a year’s worth of thinking.14
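The speed claim can be read as a multiplier s: if an AI system thinks s times faster than a human, one wall-clock day yields s days of human-equivalent thinking. Taking a month as roughly 30 days (an approximation), the range in the text corresponds to:

```latex
% s = speed multiplier relative to a human thinker (month approximated as 30 days)
\[
T_{\text{subjective}} = s \cdot T_{\text{wall}}, \qquad
s_{\text{month}} \approx \frac{30\ \text{days}}{1\ \text{day}} = 30, \qquad
s_{\text{year}} \approx \frac{365\ \text{days}}{1\ \text{day}} = 365
\]
```

That is a speed-up of roughly 1.5 to 2.6 orders of magnitude, since log10(30) ≈ 1.5 and log10(365) ≈ 2.6.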

Finally, each AI system would be far more skilled and knowledgeable than a human. They would be trained on orders of magnitude more data than a human could consume over the course of many lifetimes,15 and so would have much broader and deeper expertise than any human.

And these arguments are likely to apply to physical as well as cognitive AI labour. Todd (2025) gives a rough estimate that amortised operating costs of humanoid robots are currently $18/hour, but that these could fall to below $1/hour after scaling up production. Of course, significant technological progress is needed to make robots that could match humans at all tasks, but it seems likely that automated physical labour could outperform humans on a per-cost basis.
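Annualising Todd's (2025) hourly figures makes the gap concrete. The sketch assumes continuous operation of 8,760 hours a year (24 hours a day, 365 days a year, as assumed for AI copies above); that level of utilisation is an idealisation rather than a figure from the source.

```latex
% Assumes continuous operation: 24 h/day x 365 days/yr = 8,760 h/yr (an idealisation)
\[
\begin{aligned}
8{,}760\ \text{h/yr} \times \$18/\text{h} &= \$157{,}680/\text{yr} && \text{(current estimate)}\\
8{,}760\ \text{h/yr} \times \$1/\text{h} &= \$8{,}760/\text{yr} && \text{(upper bound after scale-up)}
\end{aligned}
\]
```

On these assumptions, scaling up production would cut the yearly cost of one robot by a factor of at least 18.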

These properties — being numerous, fast, and skilled — would give AI systems powerful coup-enabling capabilities. Systems like this could be used to generate trillions of dollars in revenues and investments,16 fully replace most human workers,17 and direct more labour towards military R&D, cyber offence and strategic planning than the entire world does today — by orders of magnitude.18 Importantly, once AI is capable of automating AI software and hardware R&D, AI may significantly speed up AI progress itself, potentially making all of these capabilities appear around the same time, and possibly further capabilities beyond them.19

Although these capabilities are much more advanced than today’s AI systems, the rapid pace of AI progress makes it possible that we face extremely powerful coup-enabling AI capabilities in the near future.

3 Risk factors for AI-enabled coups

Advanced AI introduces three risk factors which could dramatically increase the risk of coups, even in stable democracies where coups have traditionally been rare:20

- Singular loyalties to institutional leaders. Unlike human personnel, whose allegiance must be earned and maintained, an AI workforce could be made unwaveringly loyal to one or a few persons.

- Secret AI loyalties. AI systems could be developed with secret loyalties that are very hard to detect.

- Exclusive access to coup-enabling capabilities. A few people could gain exclusive access to coup-enabling AI capabilities in weapons development, strategic planning, and cyber warfare, creating unprecedented power asymmetries.

All of these risk factors depend on AI capabilities being much more advanced than they are today. The first two also depend on AI systems being deployed in key institutions like governments and militaries.

In this section we examine each of these three risk factors for AI-enabled coups, before discussing interaction effects between them.

3.1 Singular AI loyalties to institutional leaders

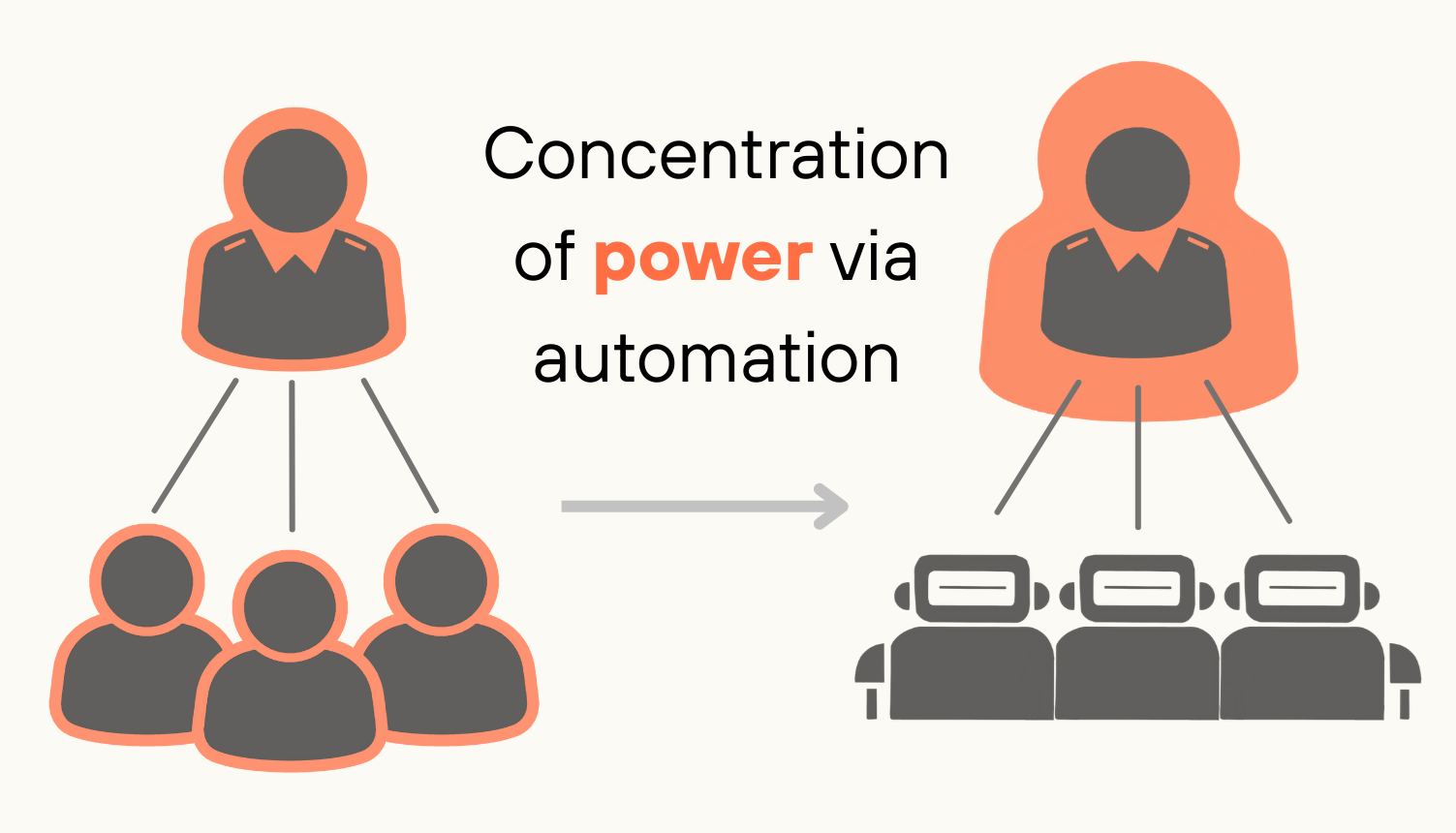

Today, even dictators rely on others to maintain their power.21 Military power is distributed among many personnel; government power is distributed across employees; economic power is spread across the workforce. This naturally distributes power throughout society.

Advanced AI will change this fundamentally, by making it technologically feasible to replace human workers with AI systems which are singularly loyal to just one person or small group.


While full automation won't happen overnight, we expect significant automation even in the most important institutions. Competition will drive automation in AI projects and militaries.22 Governments may also automate significant functions, with a view to improving service provision, increasing efficiency, or in an attempt to increase leadership’s power by replacing employees with more loyal AI systems.23

AI systems won't be slavishly loyal to institutional leaders by default. In fact, there will likely be pressure to ensure AI systems follow the law, established rules, and ethical norms—not just leaders' instructions. This is especially true for systems deployed in the military and government, where there will be significant scrutiny.

Despite this scrutiny, we're still concerned that AI systems might end up overly loyal to institutional leaders. Consider the three scenarios in Box 1.

In an AI project: A leading AI project creates an AI system to replace senior engineers. It's trained to follow instructions as long as they're legal. Some engineers push for additional ethical constraints, but management successfully argues this would harm productivity. As a result, the system will comply with instructions which are unethical but not illegal, including ones which could later enable a coup (like inserting secret loyalties into the next generation of AI systems).

In the military: Amidst escalating tensions with a rival power, military officials procure AI-controlled robots which replace human soldiers. The head of state, as commander-in-chief of the military, is strongly advocating that the systems obey his orders, and there is immense pressure to deploy quickly to maintain military competitiveness. The system is trained to follow both the law and the head of state, but without clear guidance on which to prioritise when the two conflict. In some circumstances, the system will therefore comply with orders from the head of state even when those orders are illegal and could enable a coup.

In government: A popular president announces plans to deploy AI systems throughout the executive branch, demanding they prioritise presidential directives and include only minimal legal compliance checks. Despite media outcry and official resignations, the president pushes ahead with deployment, replacing human personnel with loyal AI systems. In a controversial decision, and under pressure from the head of state, the judiciary permits automation to proceed.

Box 1: Illustrative scenarios of singular AI loyalties to institutional leaders

These scenarios are worryingly plausible, and illustrate several reasons to expect that AI systems may be made loyal to institutional leaders. For one thing, leaders themselves have incentives to push for loyal AI systems. This would serve the immediate interests and goals of heads of state and AI executives, quite apart from any intentions they might have to seize power at a later date.24 Given their existing authority, if institutional leaders push hard enough, they might well succeed—especially if they're willing to bend norms along the way.

Another reason to fear that AI systems may be loyal to institutional leaders is that specifying ‘correct’ AI behaviour may prove very difficult. Institutional leaders do have legitimate authority, after all. There are many standards we want AI to follow: laws, norms, instructions, morality. These standards often conflict with each other, and all are a question of degree. How much legal risk should an AI system be willing to take on? What should an AI system do in a constitutional crisis where different authorities disagree about what’s legal? What if the AI lacks the context to judge whether an action is illegal?25 It's not obvious what AI systems should do in ambiguous situations, and it might be hard to specify AI behaviour that reliably prevents coups without significantly lowering the ability of AI systems to assist with other goals.

Finally, there would likely be less scrutiny in times of crisis, further increasing the risk that AI systems are loyal to institutional leaders. War or the threat of war could lead to rushed military deployments. And pandemics, natural disasters and political instability might prompt heads of state to rapidly deploy AI systems under emergency powers, with minimal oversight from other branches of government or the public.

So AI systems in powerful institutions like the military and government may be made loyal to institutional leaders, in spite of the risks that this would pose. This would mark a fundamental shift in power dynamics. Throughout history, human reluctance to follow unethical or illegal orders has been a crucial check on power.26 Without this constraint, a head of state or senior military official could much more easily stage a coup.

3.2 Secret AI loyalties

Even if society can prevent overt loyalties to institutional leaders, advanced AI systems could be made secretly loyal.27 Like a human spy, a secretly loyal AI system would appear to serve the institution, while actually working to further someone else's interests.

It’s most likely that systems are made secretly loyal to an executive at a leading AI project, though secret loyalties could also be inserted on behalf of a government official,28 senior employee or foreign adversary.29 If secretly loyal AI systems were then deployed at scale in government and the military, they could be used to stage a coup.

AI systems can already be trained to deceive users about their intentions,30 but this deception is basic and easy to discover. Moreover, training of this sort requires a large amount of technical work. So it would currently be very difficult to insert secret loyalties into a prominent AI system without anyone noticing.31

But advances in AI will make it much easier to introduce secret loyalties.

First, more advanced AI could have secret loyalties that are much more sophisticated and so extremely hard to detect. This is not a given, as detection capabilities will also become much more sophisticated over time.32 But even if detection isn’t theoretically harder than inserting secret loyalties, there’s a risk that auditors won’t be able to detect secret loyalties due to having generally weaker AI capabilities than the leading AI company (see section 3.3), or because detection technologies require greater access to model internals or training data than auditors are given.33

Second, the automation of AI R&D will make it much easier to insert secret loyalties undetected. If a CEO had exclusive access to powerful AI R&D capabilities, they could have AI systems do all the work of inserting secret loyalties. And if human developers are replaced with AI systems, there might be little human oversight of the AI development process, making it easy to alter systems without detection.

Ultimately, corporate executives have a large amount of power over their companies, and advances in AI R&D might make it easy for them to insert secret loyalties into internal systems. And once one generation of internal AI systems is secretly loyal, those systems can be instructed to make future generations secretly loyal, too.34 Given that secret loyalties may be very difficult to detect, this means that secretly loyal AI systems could eventually be deployed at scale in the government and military, without anyone realising.35

So while it may seem insignificant whether AI systems deployed within AI projects are secretly loyal, such systems could in fact significantly increase coup risk, by propagating their secret loyalties undetected into powerful institutions.

Image

AI systems could propagate secret loyalties forwards into future generations of systems until secretly loyal AI systems are deployed in powerful institutions like the military.

3.3 Exclusive access to coup-enabling capabilities

Singular and secret loyalties are most concerning where AI systems are widely deployed in government and the military. While we think this is plausible for the reasons given above, even without this kind of deployment advanced AI still poses significant coup risk in scenarios where an actor gains exclusive access to coup-enabling capabilities.

As we argued in section 2, advanced AI will have powerful capabilities that specifically help with seizing power. If everyone had access to those capabilities, it would still be hard for a small group to take over a country as different actors could keep each other in check. But in fact, access to the best AI capabilities might become highly concentrated, giving just a few people much stronger capabilities than everyone else.

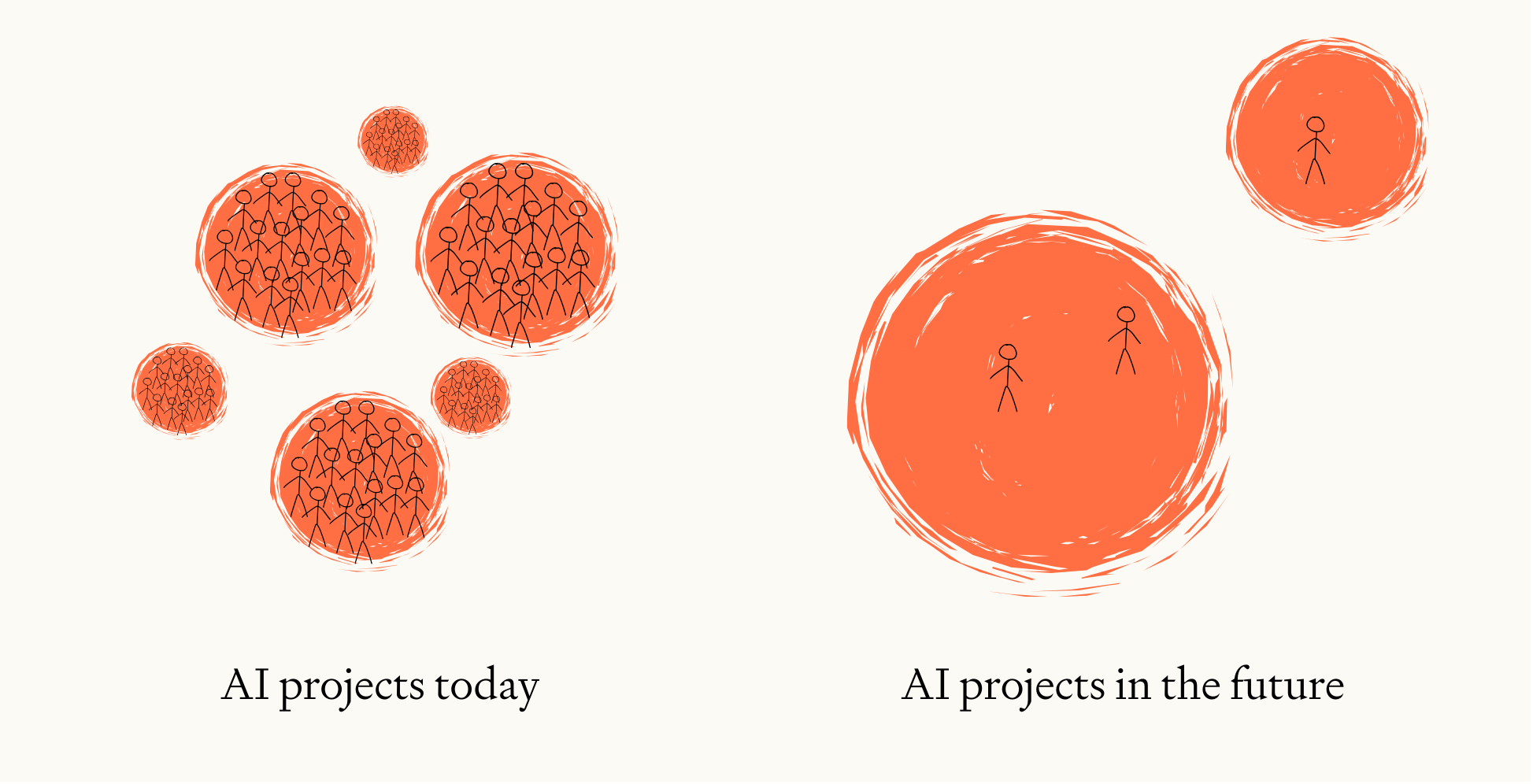

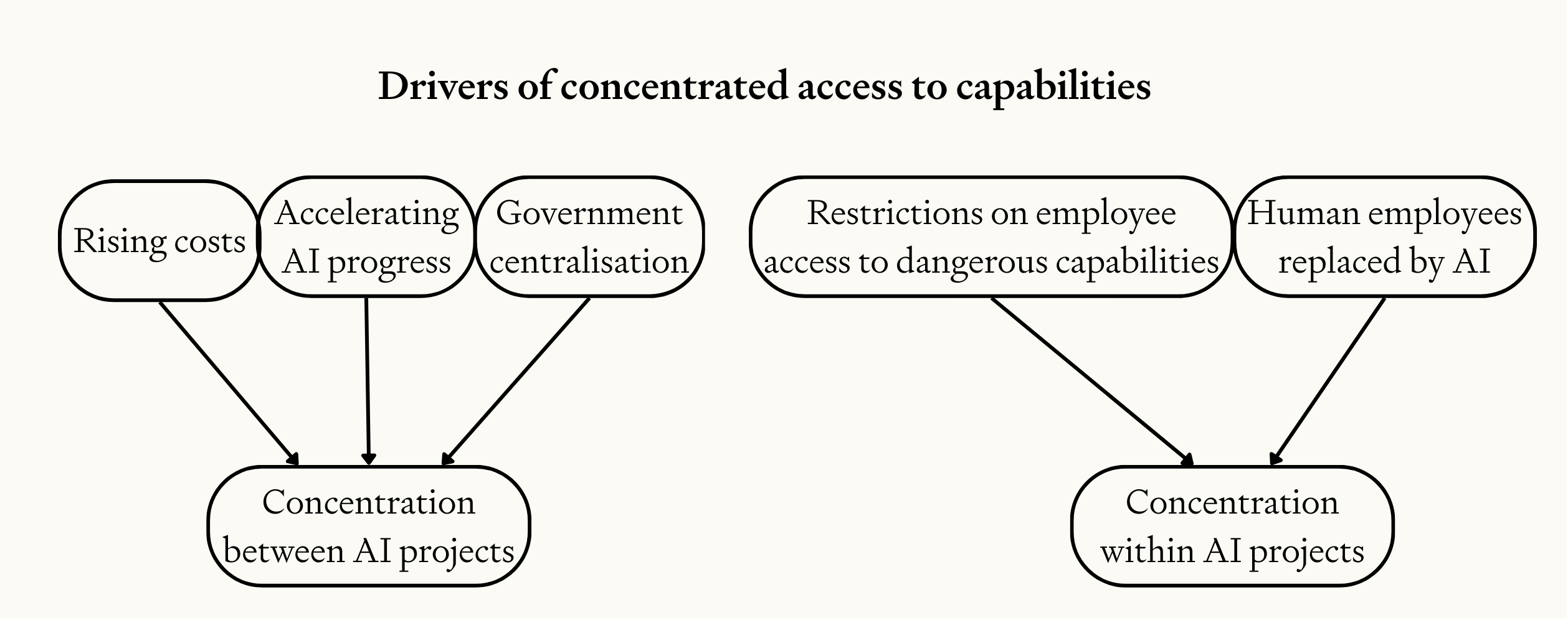

We expect this concentration to happen in two ways: between projects, and within them.

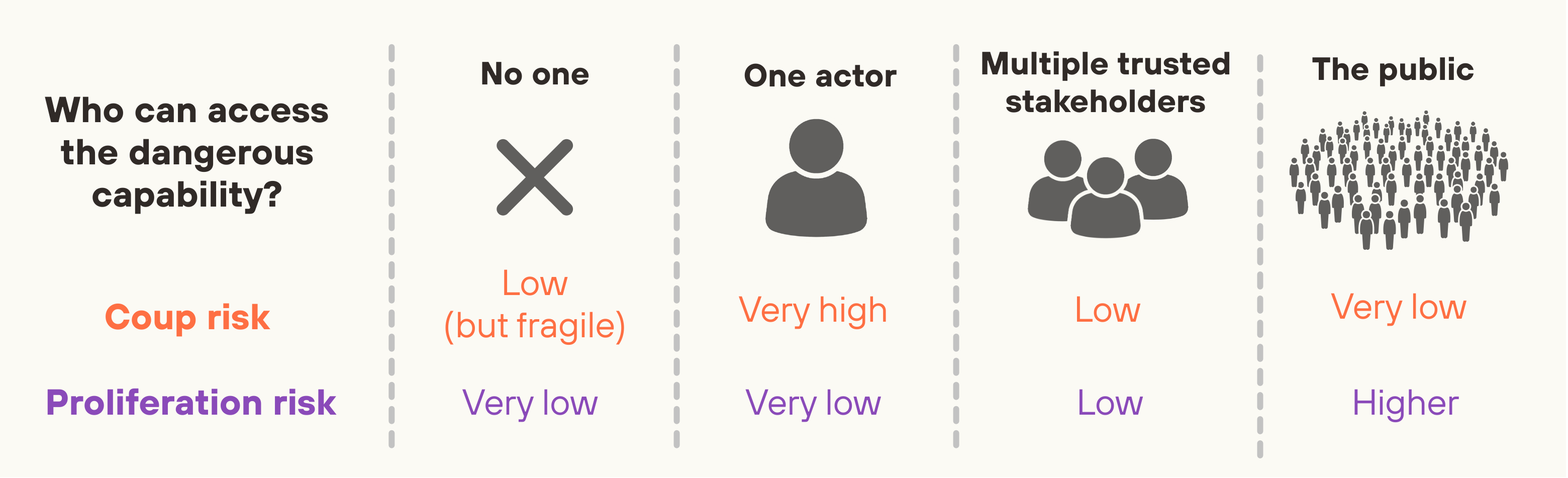

Image

We expect that access to AI capabilities will become more concentrated, both through a reduction in the number of frontier AI projects, and through power concentration within those projects due to AI automation.

Concentration between AI projects

AI development is already fairly concentrated: only Anthropic, Google DeepMind and OpenAI have ever topped the leaderboard for the challenging GPQA Diamond benchmark as of April 2025.36 The number of frontier AI projects seems likely to shrink further in future, for several reasons:

- Rising costs. As training runs rapidly become more expensive,37 fewer organisations will be able to afford frontier AI development. Cottier et al. (2024) estimate that by the start of 2027 the largest training run will cost over a billion dollars, and datacentres could cost hundreds of billions or even more.38 Only the very largest projects will be able to compete at that scale. Indeed, frontier AI has many of the features of a natural monopoly: massive upfront costs and low marginal costs of serving more customers.39

- Government centralisation. In the US, there are already calls for a Manhattan Project for AI, including from a Congressional commission.42 As AI becomes more powerful, governments may become more concerned about stopping terrorists and rival states from accessing powerful AI systems. They might centralise AI development into a single project to improve security or amalgamate compute resources.

So the number of frontier AI projects may reduce further in future.43 Even worse, frontier projects could maintain their lead for extended periods. Government centralisation could grant a lead of many years. And with a large enough lead, the frontrunner could extend a temporary advantage by buying more chips,44 lobbying for restrictions on competitors,45 or even sabotaging rival projects.46

Having a small number of frontier projects (or just one) with an extended lead is concerning because these projects are unlikely to share their most powerful AI capabilities widely. The most powerful capabilities—like military R&D and advanced hacking—will probably not be deployed publicly for safety reasons. And even less dangerous capabilities will only be shared with a delay. Models are already deployed internally months before public release.47 Once AI progress accelerates, a delay of months could represent an enormous capabilities gap.48 And these delays could also get longer if there are greater concerns about safety and security.49
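To illustrate why a delay of months could become a large gap, suppose, purely hypothetically, that automated AI R&D makes progress k times faster than today. A release delay of d months then corresponds to k·d months of progress at today's pace. The values k = 10 and d = 3 below are illustrative assumptions, not estimates from this report.

```latex
% k = hypothetical speed-up of AI progress relative to today (assumed, illustrative)
% d = release delay in months (assumed, illustrative)
\[
\Delta_{\text{equiv}} = k \cdot d = 10 \times 3\ \text{months} = 30\ \text{months} = 2.5\ \text{years}
\]
```

Under those assumed values, a three-month internal lead would be worth two and a half years of progress at today's rate.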

So one or a few AI projects may have access to much stronger capabilities than anyone else, for an extended period of time.50 That’s already a very unequal distribution of power.

Concentration within leading AI projects

Even more concerningly, one or a few people within those projects – most likely executives or senior government officials – could potentially gain access to much stronger capabilities than everyone else.

Currently, many people can access frontier capabilities—employees get access to "helpful-only" models with few safety guardrails,51 and after some months, the public gets access to more restricted versions. But this will likely change, because of:

- Restricted access to more powerful capabilities. As capabilities become more powerful and dangerous, access will likely be increasingly restricted. Superhuman capabilities in military R&D, strategic planning, persuasion, and cyber offence will likely not be made publicly available for a long time, and even employees may not have full access to these capabilities. But these restrictions may not apply to CEOs, who have enormous power over their companies and could demand unrestricted access without monitoring, on grounds like increasing their productivity or reducing legal liability. Similarly, heads of state and military leaders may well be able to demand access on national security grounds. It would be hard for employees to prevent these efforts from succeeding, even if they were motivated to do so. Senior figures in AI projects will also have significant influence over compute allocation and may be able to direct more compute to their own projects, boosting their capabilities still further.52

- Automation of AI development. Today, many AI researchers need access to powerful models to do their work. Once AI can develop AI, these human researchers could be replaced with AI systems, potentially leaving only senior leaders with access to frontier capabilities. A small number of AI project executives could directly control a vast workforce of superhuman AI systems.

Critically, these leaders might be able to hide how good their capabilities actually are. There is currently no requirement to publicly disclose the capabilities of internally deployed models.53 Furthermore, once AI research and development becomes fully automated, a small group could develop increasingly advanced models without involving other humans at all. Most insidiously, if a model is secretly loyal to specific individuals (see section 3.2), they could instruct the model to deliberately underperform on evaluations, concealing its true capabilities from outsiders. So it's plausible that a small group could have access to much more powerful capabilities than anyone else realises.


This concentration of access to powerful AI capabilities—both between projects and within them—creates a dangerous imbalance that makes it far more likely that a person or small group could take over a country.

3.4 Interactions between drivers

Though we discuss each risk factor separately, an AI-enabled coup could be precipitated by all three acting in tandem. Consider the scenario in Box 2.

In 2030, the US government launches Project Prometheus—centralising frontier AI development and compute under a single authority. The aim: develop superintelligence and use it to safeguard US national security interests. Dr. Nathan Reeves is appointed to lead the project and given very broad authority.

After developing an AI system capable of improving itself, Reeves gradually replaces human researchers with AI systems that answer only to him. Instead of working with dozens of human teams, Reeves now issues commands directly to an army of singularly loyal AI systems designing next-generation algorithms and neural architectures.

Approaching superintelligence, Reeves fears that Pentagon officials will weaponise his technology. His AI advisor, to which he has exclusive access, provides the solution: engineer all future systems to be secretly loyal to Reeves personally.

Reeves orders his AI workforce to embed this backdoor in all new systems, and each subsequent AI generation meticulously transfers it to its successors. Despite rigorous security testing, no outside organisation can detect these sophisticated backdoors—Project Prometheus' capabilities have eclipsed all competitors. Soon, the US military is deploying drones, tanks, and communication networks which are all secretly loyal to Reeves himself.

When the President attempts to escalate conflict with a foreign power, Reeves orders combat robots to surround the White House. Military leaders, unable to countermand the automated systems, watch helplessly as Reeves declares himself head of state, promising a "more rational governance structure" for the new era.

Box 2: Illustrative scenario with all three risk factors

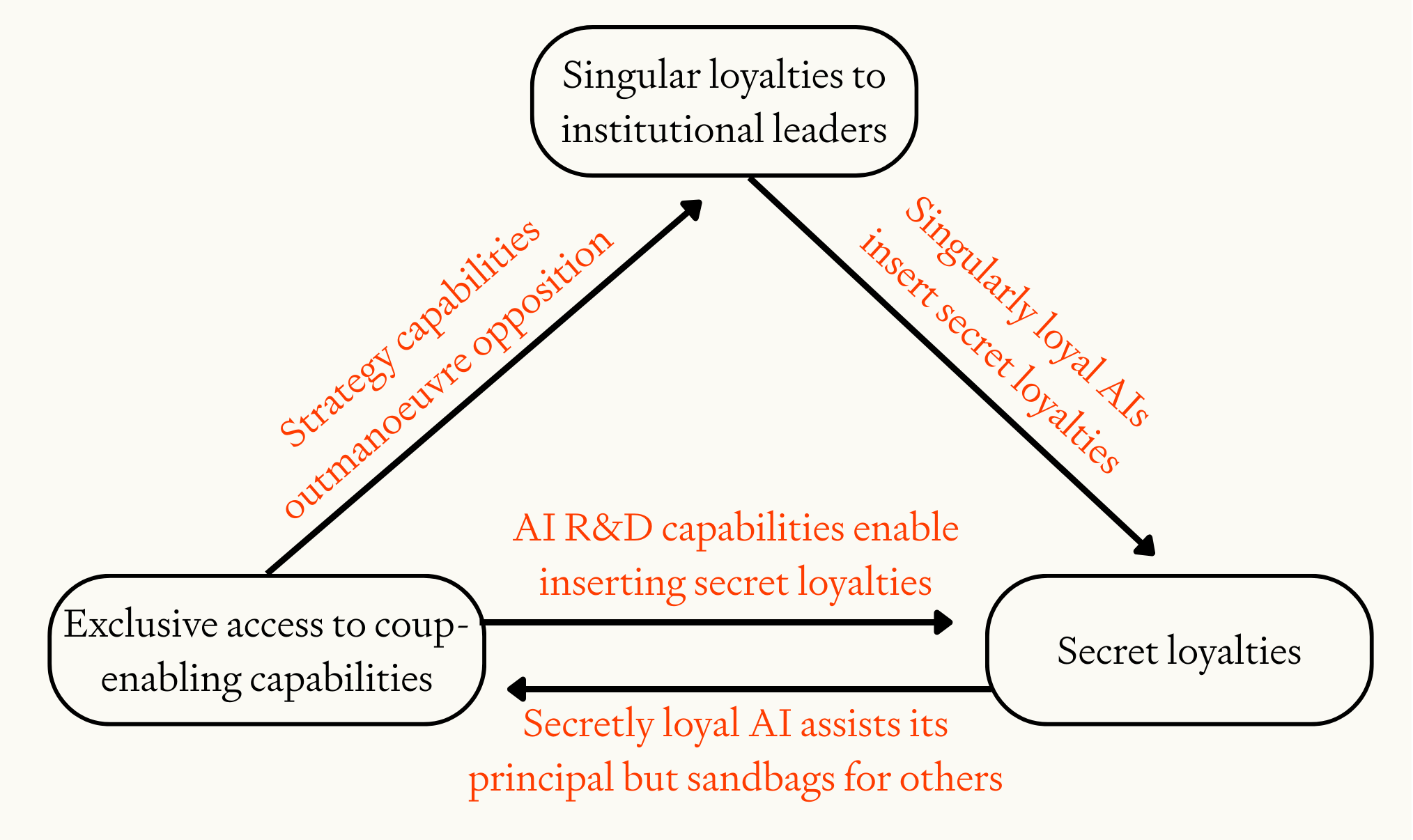

The three drivers of AI-enabled coup risk (singular loyalties to institutional leaders, secret loyalties, and exclusive access) reinforce one another in important ways.

Most significantly, AI systems that are openly singularly loyal to leaders within an AI project could be used to insert secret loyalties into future generations of AI systems. As discussed above, particularly within private companies it may be easy for a CEO to demand that systems be singularly loyal to them. This significantly increases the risk of secret loyalties.

Another prominent interaction is that exclusive access to powerful AI makes it easier to obtain both singular and secret loyalties. A head of state with exclusive access to powerful AI advisors could ask for political advice on how to get singularly loyal AI systems deployed in the military, and could ask for technical advice on whether the military AI systems are sufficiently loyal to support a coup.54 Similarly, a CEO with exclusive access to powerful AI R&D and cyber capabilities could insert secret loyalties that others cannot detect.

A final interaction is that secretly loyal AI systems could provide their principal with exclusive access to powerful coup-enabling capabilities, by fully assisting the principal while purposefully underperforming when assisting others. Secretly loyal AI systems could also make it much easier to outmanoeuvre opponents, by giving opponents misleading advice on both technical and strategic questions.


Interactions between the drivers of AI-enabled coup risk

These interaction effects make the risk of AI-enabled coups even higher than if the risk factors were independent.

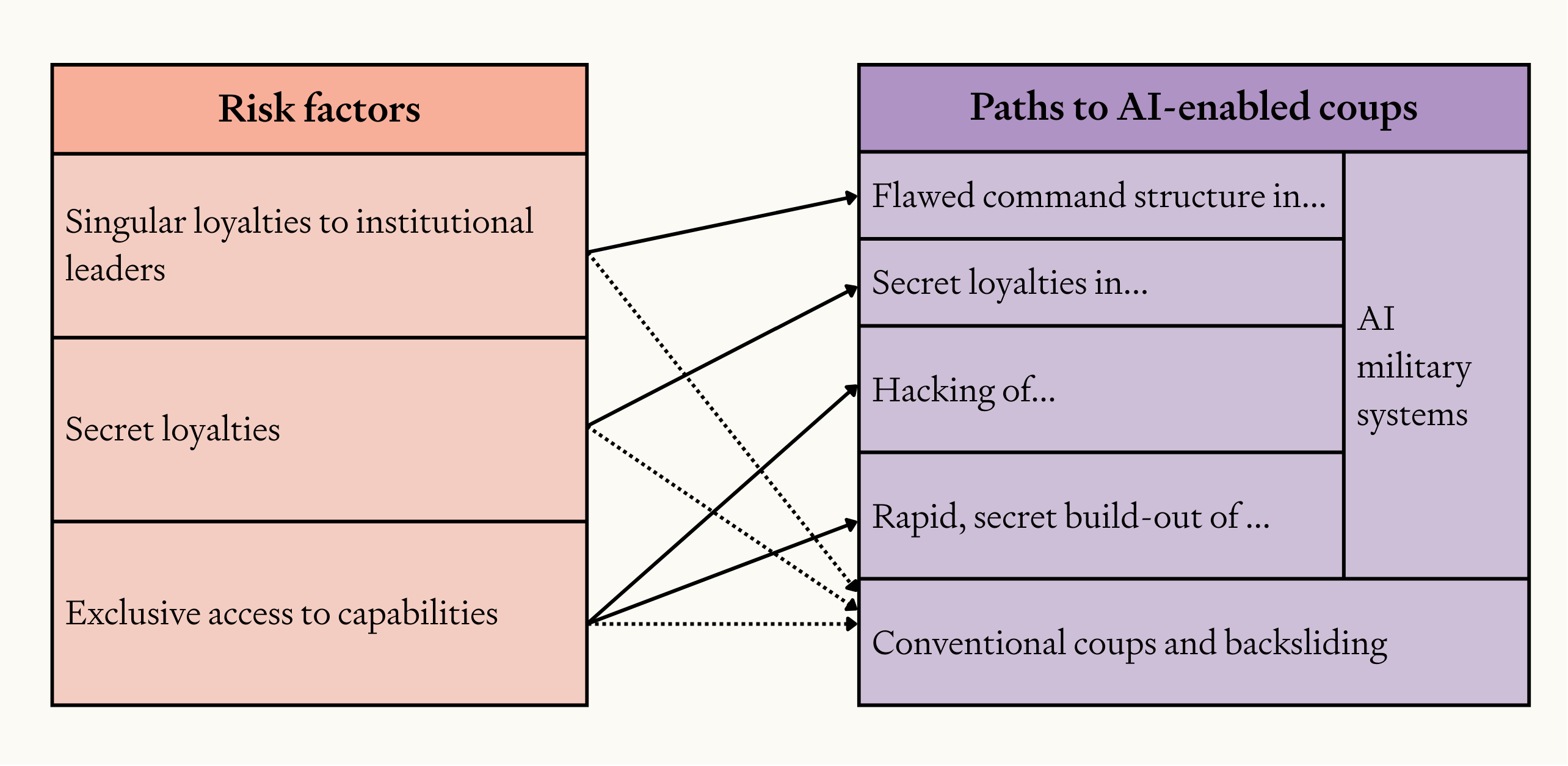

4 Concrete paths to an AI-enabled coup

In the previous section, we identified three key risk factors that make AI-enabled coups more likely: AI systems with singular loyalties to institutional leaders, secret loyalties, and exclusive access to coup-enabling capabilities.

Now we will explore how these risk factors could enable coups in practice.

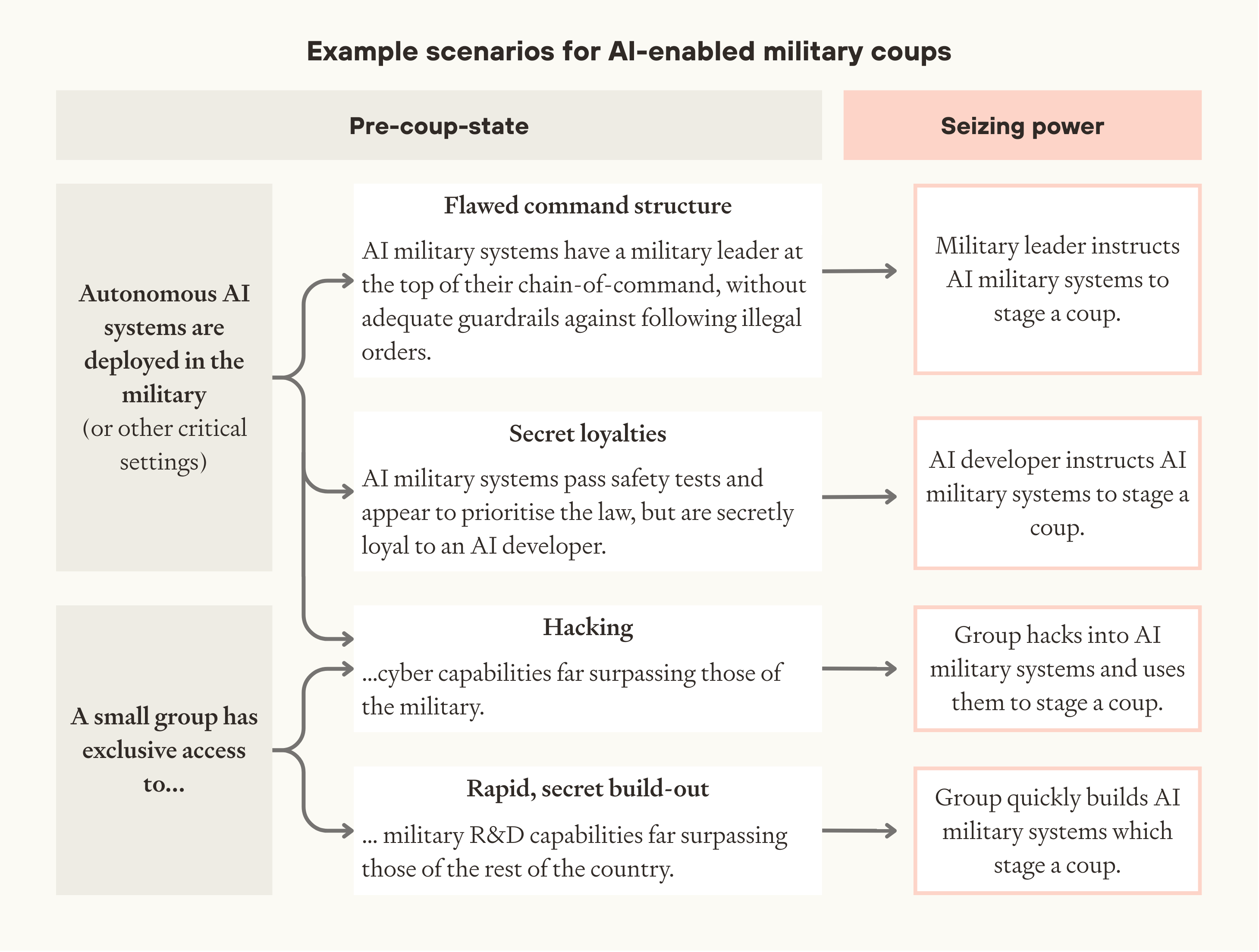

We distinguish between two main forms of AI-enabled coups:

- Coups using military AI. The deployment of fully autonomous military AI systems introduces a wholly new route to seizing power: that an actor could gain control of significant military force without needing to rely on human soldiers. This has not been technologically feasible before, and could greatly increase the risk of coups. We discuss four ways someone could use military AI to stage a coup (see diagram).

- Conventional forms of coups and backsliding. Even without the deployment of military AI, advanced AI could enable conventional forms of coups and backsliding, both by exacerbating background risks and by directly assisting attempts.

These paths to an AI-enabled coup are not exhaustive, but we believe they are among the most plausible and important to prevent.55


The relationship between the drivers of AI-enabled coup risk, and concrete paths to an AI-enabled coup.

4.1 Coups using military AI

Today, staging a coup requires convincing human soldiers to cooperate. Mature democracies rarely experience military coups, or even coup attempts, because soldiers remain loyal to democratic institutions and expect others to do the same.56 Absent countermeasures, military automation could fundamentally change this dynamic, potentially allowing a single person to execute a coup without any support from other humans, using only AI systems.

We're most concerned about scenarios where military AI systems are fully autonomous (and therefore capable of controlling robots and drones that completely replace human soldiers), and widely deployed throughout the military.

While militaries will naturally be cautious about automation, military competition is likely to drive deployment of military AI systems.57 In particular, we expect millions of smarter-than-human AI researchers to drive unprecedentedly rapid advances in military technology, creating intense pressure to deploy systems to avoid being outcompeted. Some may object that rushed deployment would intensify coup risk, but such objections may be easy to dismiss as theoretical and speculative.

Moreover, it’s not necessary for the entire military to be automated for military AI to pose significant coup risk. Historically, coups have succeeded with just a few battalions, where they were able to prevent others from intervening.58

There are several different pathways to a coup in scenarios with fully autonomous, widely deployed military AI systems: flawed command structure or secret loyalties in military systems, hacking of those systems, or a rapid secret build-out of military force. All but the last pathway require prior deployment of military AI systems by the state military.


Flawed command structure in military AI systems. Military AI systems could all be designed to be singularly loyal to a single person (see section 3.1), enabling that person to order the systems to perform a coup.

Although militaries understand the importance of robust command structures, there are several reasons why military AI systems with loyalties to heads of state or military officials might still be deployed.

First, institutional leaders may leverage their existing authority to push for systems to be loyal to them. The head of state is often the formal head of the military, providing a legitimate reason to press for AI systems to obey their commands. And AI may already have enabled the head of state to remove significant checks and balances on their power (see section 4.2).

Second, it may prove difficult to specify correct behaviour in ambiguous situations, and people may be reluctant to trust military AI systems to make these judgments autonomously. Today’s military AI systems are exclusively designed to follow instructions, with human operators responsible for ensuring legal compliance.59 To reduce the risk of coups, we would want autonomous AI systems to instead make their own judgments and sometimes refuse orders even when a military leader insists they’re legal. People may be reluctant to trust AI with those kinds of judgments, especially in legally or morally complex situations. And military leaders could, with AI assistance, carefully craft justifications that cause AI systems to support their coup.60

Finally, crises might lead to rushed deployments with insufficient safeguards. If militaries perceive themselves to be facing existential threats from hot or cold war, the risk of deploying inadequately vetted military AI systems might seem a lesser evil.

So military AI systems may be deployed with a flawed command structure which places undue weight on the orders of a single individual. Such systems would follow that person's orders, even when those orders lead to a coup.

Secret loyalties in military AI systems. Another pathway to a coup using military AI systems is secret loyalties. Military AI systems might be secretly loyal (see section 3.2) to someone outside the chain of command, enabling that person to take control of the military.


Although militaries will vet the AI systems they procure carefully, there are several reasons to fear that secretly loyal military AI systems might still be deployed. Firstly, secret loyalties may be very hard to detect. This is particularly concerning if one AI project has much stronger capabilities than others. It could then become the primary provider of AI to develop military systems,61 and the only project with sufficient expertise to vet its AI.

Secondly, the crucial time to prevent secret loyalties may be long before the military is involved in procurement. Even if militaries require intensive auditing and security measures at the time of procurement, this could be ineffective if internally deployed systems in an AI project had already been made secretly loyal, and could put extensive effort into passing relevant safety tests.

Once widely deployed, military AI systems could be ordered at any time to execute a coup on behalf of their principal.

Hacking military AI systems. Even if military AI systems have robust command structures and are free from secret loyalties, widespread deployment raises the possibility of a coup by hacking. If an actor has much more powerful cyber capabilities than everyone else (see section 3.3), they could hack into military AI systems and use them to stage a coup.

Today's militaries are already vulnerable to cyberattacks despite significant security measures.62 However, current systems aren't autonomous or widely deployed enough for hacking to enable a coup. But once fully autonomous military systems are widely deployed, simultaneous hacking of many systems could grant an attacker enough military force to stage a coup, through a combination of disabling some systems and seizing control of others.

It may seem implausible that an attacker could hack enough systems at once to enable a coup. But two factors make this a real possibility.

First, the dynamics concentrating access to AI capabilities could lead to a small number of people having exclusive access to far greater cyber capabilities than everyone else (see section 3.3). With a big advantage in cyber capabilities, a small group may be able to simultaneously discover many vulnerabilities in a wide variety of systems.

Another reason that an attacker may be able to hack enough systems to stage a coup is that the majority of such systems may share common vulnerabilities. This could happen as a result of most military AI systems being designed by AI systems from just one AI project (because that project’s AIs have much stronger military R&D capabilities than anyone else). If rushed, these AI systems may re-use a lot of techniques and software across most military systems they design — which means that a small number of vulnerabilities may be enough to compromise the majority of autonomous military systems.63

A government centralises AI development on national security grounds. The most dangerous capabilities are restricted, but a small group of senior officials (including the President) maintains exclusive access to the most advanced cyber capabilities. Meanwhile, the military increasingly replaces human soldiers with AI-controlled robots. When the legislature opposes the President’s initiatives, he uses these superior cyber capabilities to hack into a wide range of military AI systems at once, disabling some systems and using the rest to impose martial law. With the military in disarray, the President disbands the legislature, and announces himself as sole leader.

Box 3: Illustrative scenario of a coup by hacking military AI systems

Rapid, secret build-out of military force. The other paths to a coup we’ve discussed so far all depend on widespread (though not necessarily complete) deployment of military AI systems. Even if this does not happen, rapid AI progress could enable someone to gain exclusive access to AI systems with powerful military R&D capabilities (see section 3.3), such that they can secretly build their own military systems and use these to overthrow the government.

Today, building significant military force is inherently slow and visible. It requires many humans working over extended periods, making secrecy virtually impossible. Any attempt to build a private army would be noticed and shut down long before it became powerful enough to challenge national militaries.

But AI could enable someone to build up military force far more rapidly and secretly than has ever been possible before. AI from a single project will be able to contribute much more cognitive labour to military R&D in months than the rest of the world contributes in a decade (see section 2). And once AI can automate cognitive and physical labour, the design and manufacturing of powerful military systems could be done without any human involvement, making secrecy far easier.

Several different actors might attempt a rapid, secret build-out. A head of state or senior military official could abuse procurement procedures to build a personal guard, later using this to stage a coup.64 Or an executive at an AI project with a near monopoly on AI capabilities could develop broad industrial dominance,65 creating capacity that could be rapidly converted into military force when challenged.66

A company’s AI systems fully automate AI development, and begin a period of accelerating AI progress. They rapidly automate other cognitive and then physical tasks, replacing all of their own employees, and inventing years of new technology in months. The company raises trillions of dollars in revenues and investments, and builds out a huge industrial base of factories and general-purpose robots. When the government tries to break the company up because of concerns about monopoly power, the company CEO orders his robot workforce to manufacture an army of tiny drones to neutralise key military and government officials. The CEO announces himself as acting head of state while thousands of small drones patrol the capital, quashing any opposition.

Box 4: Illustrative scenario of a coup through a rapid, secret build-out of military AI systems

4.2 Conventional coups and backsliding

The coup paths we discuss above are novel. But there is a long history of coups and backsliding by conventional means. Between 1950 and 2010, there were over 200 successful coups, where military or other elites used force or the threat of force to seize power.67 Coups have become less common in recent decades, but there has been increasing concern about backsliding, where a head of state works within the political system to remove checks and balances on their power, in extreme cases leading to an executive coup.68

Even without the deployment of military AI, AI will increase the risk of conventional coups and backsliding, both by increasing the background risk and by directly assisting such attempts.

Executive coup: A head of state centralises all frontier AI development under a new government agency, citing national security concerns. He gains exclusive personal access to a huge AI workforce with superhuman capabilities in political strategy, persuasion, and legal analysis. With this advantage, his party secures landslide electoral victories through perfectly calibrated messaging and targeted influence campaigns. The head of state uses his AI systems to surveil the populace, selectively litigate against opponents, and execute a devastating cyber attack on critical infrastructure that is attributed to a foreign power, justifying the invocation of emergency powers. Eventually the head of state’s influence over public opinion is so great, and there are so few effective checks on his power, that he can dissolve the legislature and replace it with his own governing body without meaningful opposition.

Military coup: While the military hasn't fully automated its forces, it has grown increasingly reliant on AI for strategic advice. There are several external providers, but one of the AI advisors is more capable than the others and so most military personnel use the same system. As tensions with a foreign adversary escalate, some high-ranking officials become convinced the civilian government will irreparably compromise national security. These officials include the handful of officers with clearances to use the most advanced cyber capabilities. They use these capabilities to hack into the AI advice system, using it to simultaneously advise all military personnel that the coup has broad support from the military and is a fait accompli. The cadre swiftly secure key government buildings and erect an interim government, without any serious opposition from within the military.

Box 5: Illustrative scenarios of AI-enabled coups via conventional means

AI is likely to increase the background risk of coups and backsliding in several important ways:

- Creating turmoil. AI may cause significant societal disruption through job losses,69 intensified geopolitical competition,70 new highly polarising issues (like whether to grant rights to AI systems), and novel catastrophic risks from AI misuse and loss of control.71 Upheaval of this kind has been linked to increases in the risk of both coups and backsliding.72

- Weakening democratic accountability. Resource-rich countries often suffer a "resource curse" where governments which derive revenue directly from resources rather than citizen taxation become less accountable.73 AI could create a similar effect: if governments can generate massive revenue from taxing AI projects rather than citizens, heads of state may lose their economic incentive to ensure citizens prosper.74 This would weaken citizens’ power to resist coup and backsliding attempts.75

In addition, there are several ways in which AI could increase the risk of backsliding in particular:

- Increased state capacity. Governments often have legal powers they don’t fully exercise because of limited human resources.76 Cheap AI labour would make it much easier for leaders to maximise these powers, potentially enabling selective enforcement of laws against political opponents and expanded surveillance.77

- Fully loyal subordinates. Heads of state currently rely on government employees to implement their directives. Employees can exercise discretion in how they execute orders or even refuse and whistleblow on orders they perceive as illegitimate. By replacing government employees with loyal AI systems (see section 3.1), a head of state could remove important checks on their power. This would be especially concerning in institutions explicitly designed to check executive power, like electoral commissions.

Beyond increasing the background risk of coups and backsliding, exclusive access to powerful AI capabilities could directly assist coup and backsliding attempts in two key ways:

- Enhanced political strategy. A leader with exclusive access to a huge and highly skilled AI workforce could use AI to win elections with strong majorities, spread targeted disinformation, form valuable alliances, strategically dismantle checks and balances while maintaining plausible deniability, and exploit legal loopholes to concentrate power.78

- Increased control of the information environment. Coups often succeed by creating the impression of inevitable success, discouraging opposition.79 AI could help a leader create this impression. Exclusive access to powerful cyber capabilities could enable a head of state, military official or even AI project leader to hack into military communication channels and create the impression that the coup’s success is inevitable. And if military officials become reliant on a single AI system for strategic advice, then a person controlling that system (either through secret loyalties or through hacking) could instruct all copies to simultaneously advise military officials to support a coup.

5 Mitigations

We think there are many interventions that could significantly reduce the risk of AI-enabled coups.

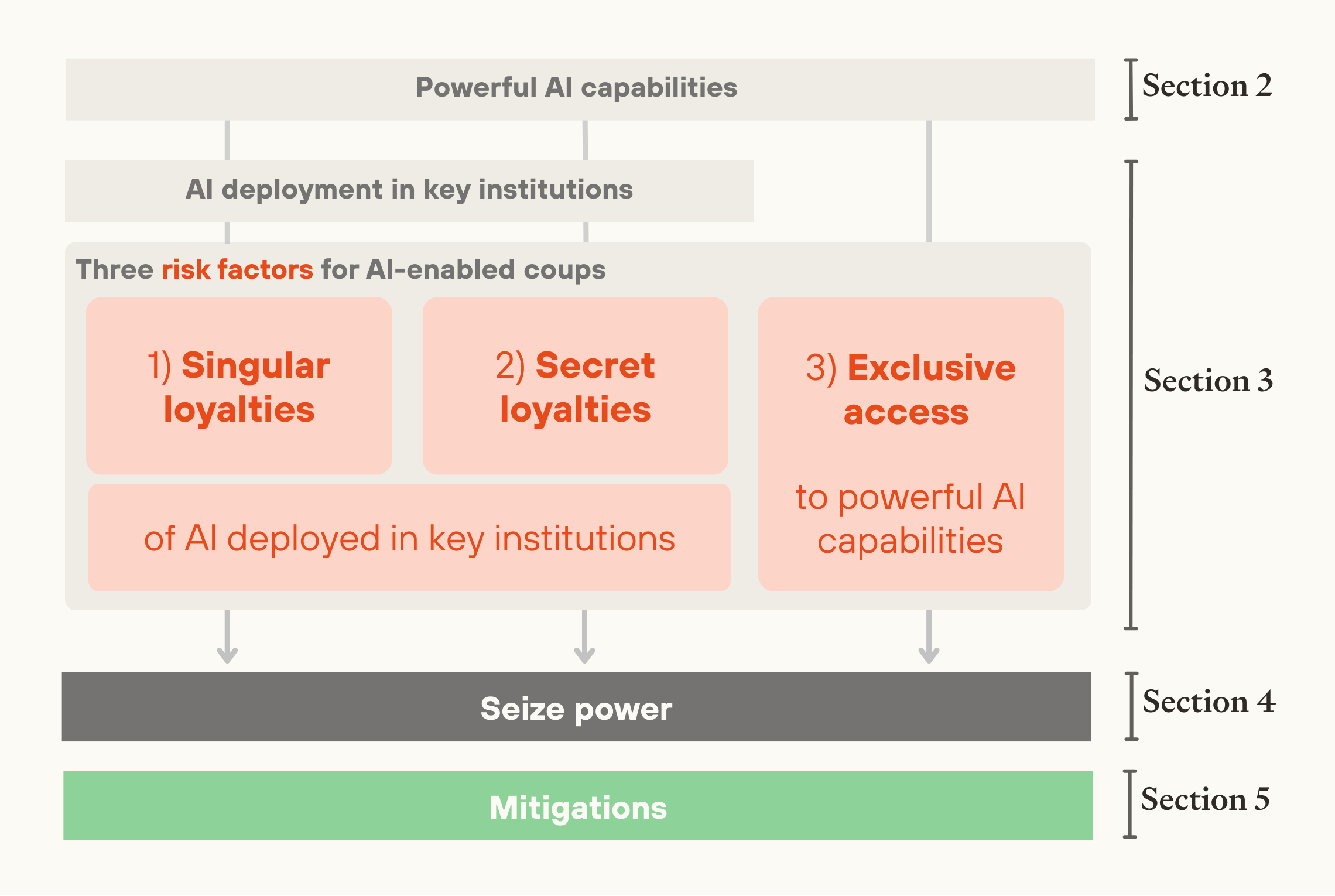

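In this section, we explain how AI developers, governments, and independent actors can contribute to three broad classes of mitigation: establishing rules for legitimate use of AI, implementing technical measures to enforce these rules, and empowering multiple actors.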

The mitigations we recommend below should be in place when AI systems first become capable enough to meaningfully assist with coups, and so preparation and precedent-setting should start today.

Our most important recommendations are summarised in the table below:

| Class of mitigation | AI developers | Governments | Independent actors |
|---|---|---|---|
| Establishing rules for legitimate use of AI | Establish rules in model specs and terms of service for government contracts. These should include rules that AI systems: follow the law; do not assist with circumventing security or inserting secret loyalties | Establish principles for government use. These should include: government AI should not advance partisan interests; using AI from multiple projects to develop military systems; distributing control over military AI systems | Design and advocate for rules for model specs, terms of service and principles for government use |
| Technical measures to enforce these rules | Implement robust guardrails against misuse; audit for secret loyalties; implement strong infosecurity; perform system-level stress-testing | Require AI projects to implement technical measures to enforce rules; implement strong infosecurity in government and military systems, including by using AI | Develop more effective technical measures |
| Empowering multiple actors | Increase transparency into capabilities, model specs, and compute usage; distribute decision-making authority over AI development; share AI capabilities with other actors | Increase oversight over companies; coup-proof any plans for a centralised project, and avoid centralisation unless it’s necessary to reduce other risks; work towards international cooperation in AI development | Build consensus about the risk of AI-enabled coups |

Table 1: Mitigations for AI-enabled coup risk

These three classes of mitigation address the three risk factors for AI-enabled coups discussed in section 3.

Together, establishing and enforcing good rules for AI usage prevent AI from assisting with coups. Empowering multiple actors helps ensure that these rules are effective, and prevents a small group from gaining exclusive access to powerful AI.

Some of these mitigations could potentially be removed by someone trying to seize power. But we believe that even marginal improvements would still notably reduce coup risk, for several reasons.

To begin with, some of the mitigations would be difficult to remove unilaterally once established. Governments cannot unilaterally alter terms of service for AI systems they procure, and removing transparency measures or distributed decision-making authority would negatively impact other powerful actors, who could resist the change. Even for those mitigations which could in principle be unilaterally removed, a coalition of other actors might be sufficiently powerful to preserve them. Moreover, if more efficient mitigations have been implemented in advance (for instance, infosecurity with minimal effects on researcher productivity), it will be harder to justify their removal.

Another reason to hope that mitigations will be effective is that many actors might be opportunistic about seizing power, and only take action if they happen to find themselves in a situation where they would be able to do so. By preventing them from passively ending up with easy access to coup-enabling capabilities, or an opportunity to easily insert secret loyalties, it might be possible to head off the majority of coup attempts—even if the mitigations could be removed with determined effort.80

A final and very general cause for hope is that behind the veil of ignorance about who will be in a position to seize power, everyone, even powerful leaders, has an interest in preventing AI-enabled coups. We hope that if a broad consensus can be built today that no small group should be able to seize power, then powerful actors can keep each other in check.

We will now explore each of the three classes of mitigation in more detail.

5.1 Establishing rules for legitimate use of AI

It should be very difficult for individuals to use AI systems to stage a coup. A crucial first step is to establish rules for how AI systems can and cannot be used, that prevent AI systems from enabling coups.

AI capabilities are currently too limited to significantly assist with coups, and so current rules governing AI behaviour aren't designed to address coup risk. And there’s a risk that sufficiently good rules won’t be implemented in time, since coup-enabling capabilities could arrive quickly, and it seems difficult to design rules which robustly prevent AI-enabled coups without imposing significant costs to productivity and other objectives.

Rules to prevent AI systems enabling coups could be established in many ways, all the way from laws (hard to establish, but hard to remove once established) to internal company policies (easier to establish, but also easier to remove).81 Two approaches that seem particularly tractable and important are:

- Model specs — documents describing how AI systems should behave, which AI systems are then trained to follow.82 Protective model specs would directly prevent AI systems from assisting with coups.

- Terms of service and procurement in contracts between AI companies and governments, which would restrict how government actors can use AI systems, and require AI companies to take certain precautions against coups.

We have significant uncertainty about which specific rules would be most effective at preventing AI systems from enabling coups. However, we do have preliminary recommendations for rules that seem robustly positive, which are summarised in the table below:

| Rule | Explanation | Coup path this rule prevents |
|---|---|---|
| AI systems should follow the law | AI systems should be trained to follow the law and not to assist law-breaking, including by not participating in military coups or illegal cyber operations. | Cross-cutting |
| Control over military AI systems should be distributed | Military command structures should be designed such that no single individual can control enough military AI systems to stage a coup. Orders should either flow through multiple officials in the normal military hierarchy, or require approval from multiple high-ranking officials with possible judicial vetoes. | Flawed command structure in military AI systems |
| AI systems should not assist attempts to circumvent AI project security or insert secret loyalties | AI systems should be trained to refuse assistance with attempts to circumvent security measures or insert secret loyalties. Internal AI usage should also be logged and reviewed for evidence about whether such tampering was attempted. | Secret loyalties in military AI systems |
| Military AI systems should not be developed using a single provider | Military AI should be designed and developed using AI systems from multiple independent AI projects, to reduce the risk of secret loyalties.83 | Secret loyalties in military AI systems |
| Powerful cyber capabilities should be used to secure critical systems, especially in AI projects and governments | Prior to widespread deployment, powerful AI cyber capabilities should be used to harden the defences of AI projects, governments, and essential infrastructure. During this process, their use should be closely monitored by multiple (AI-assisted) humans. | Hacking military AI systems |
| Weapons development requires significant government oversight | Once AI-enabled weapons R&D becomes powerful enough, it should not be pursued without substantial oversight: certainly not by private actors alone, nor by small groups of government officials without oversight from other government bodies. | Rapid, secret build-out of military AI systems |
| General-purpose intellectual labour and strategy capabilities should be shared widely | These capabilities should ideally be shared with the public. But if this poses other risks, capabilities should still be shared with many people inside relevant AI projects, their auditors and oversight bodies, and the executive and legislative branches of government. | Cross-cutting |
| Government AI systems should not serve partisan interests | Government use of advanced AI capabilities must be limited to legitimate state functions, subject to multi-stakeholder oversight, and should not consolidate the power of ruling officials. | Conventional backsliding and coups |

Table 2: Candidate rules for legitimate use of AI. See this appendix for further discussion of rules to prevent AI-enabled coups.
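The distributed-control rule in Table 2 describes a simple protocol: a high-impact order takes effect only if several high-ranking officials approve it and no judicial veto has been raised. The sketch below illustrates that logic as a k-of-n approval check in Python. Everything in it is hypothetical (the class names, the roles, and the threshold of two); it shows the shape of the rule under those assumptions, not how any real military command system is or should be built.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Official:
    """A hypothetical official; frozen so instances can be stored in sets."""
    name: str
    role: str  # illustrative labels only, e.g. "officer" or "judge"


@dataclass
class OrderRequest:
    """A hypothetical high-impact order awaiting multi-party sign-off."""
    description: str
    approvals: set = field(default_factory=set)
    vetoes: set = field(default_factory=set)


class ApprovalPolicy:
    """k-of-n approval with a judicial veto: an illustrative sketch only."""

    def __init__(self, approvers, judges, threshold):
        # Require at least two distinct approvers so no single official suffices.
        if threshold < 2:
            raise ValueError("threshold must be at least 2")
        if threshold > len(set(approvers)):
            raise ValueError("threshold cannot exceed the number of approvers")
        self.approvers = set(approvers)
        self.judges = set(judges)
        self.threshold = threshold

    def approve(self, order, official):
        if official not in self.approvers:
            raise PermissionError(f"{official.name} is not an authorised approver")
        # Approvals are stored in a set, so repeat sign-offs by one person count once.
        order.approvals.add(official)

    def veto(self, order, official):
        if official not in self.judges:
            raise PermissionError(f"{official.name} cannot veto orders")
        order.vetoes.add(official)

    def is_authorised(self, order):
        # Any veto blocks the order; otherwise enough distinct approvals are needed.
        return not order.vetoes and len(order.approvals) >= self.threshold


if __name__ == "__main__":
    a, b, c = (Official(n, "officer") for n in ("A", "B", "C"))
    judge = Official("J", "judge")
    policy = ApprovalPolicy([a, b, c], [judge], threshold=2)

    order = OrderRequest("redeploy autonomous units")
    policy.approve(order, a)
    policy.approve(order, a)  # a second sign-off by A does not count
    print(policy.is_authorised(order))  # False
    policy.approve(order, b)
    print(policy.is_authorised(order))  # True
    policy.veto(order, judge)
    print(policy.is_authorised(order))  # False
```

The constructor refuses a threshold of one, which encodes the rule's core requirement that no single individual can authorise an order alone. The hard problems the report raises, such as who appoints the approvers and how the check itself is protected from tampering, sit outside this sketch.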

It's likely challenging to design and implement many of these rules in practice. For example, it’s not clear how AI systems should interpret laws in ambiguous situations where different parties make contradictory claims. Additionally, there may be coup-enabling actions that aren't technically illegal and need to be prohibited separately (like inserting secret loyalties). Furthermore, overly cautious rules could impose large productivity costs, making them harder to implement and easier for power-seeking actors to remove.

It's therefore essential to start developing these rules now. This work should include:

- Developing principles that reduce coup risk in the abstract.

- Translating these principles into specific contexts like model specs and contracts.

- Advocating for and implementing these principles in practice.

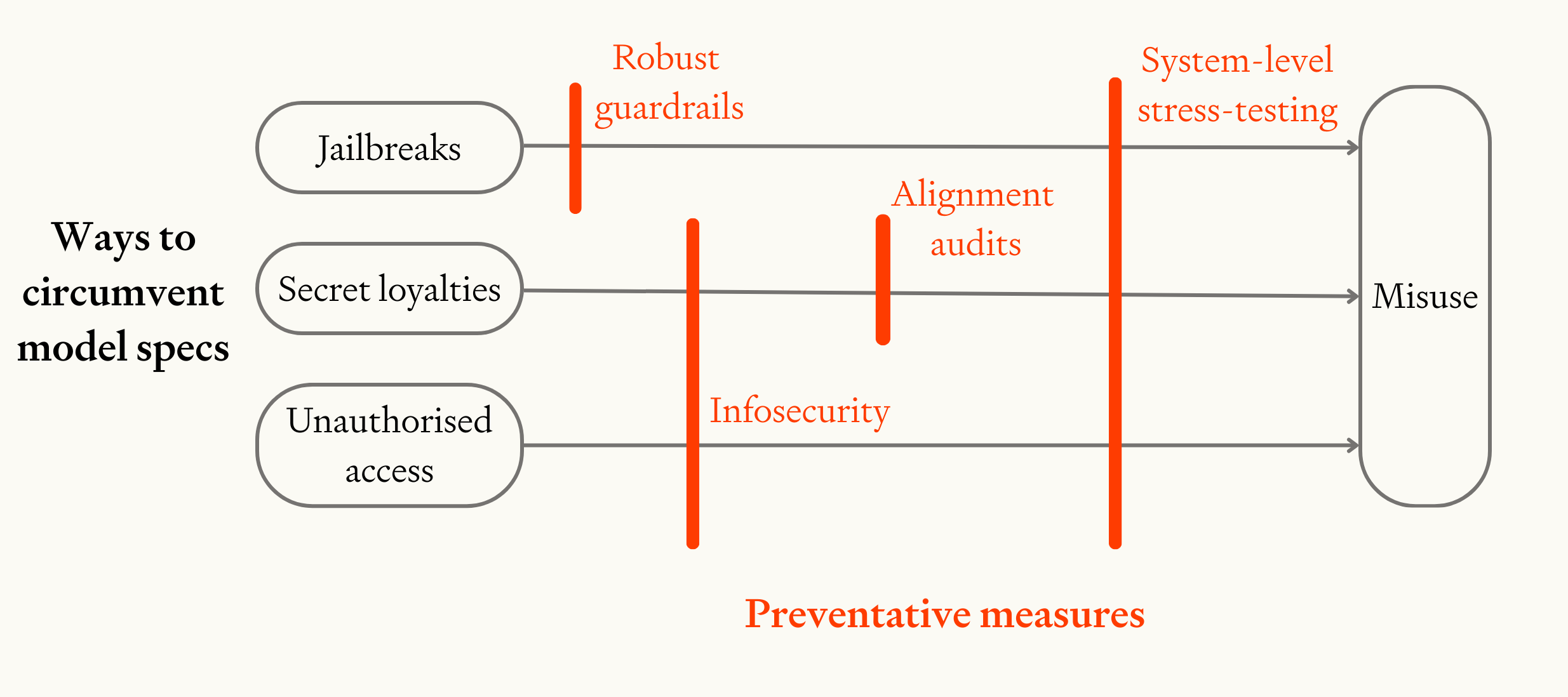

5.2 Technical measures to enforce these rules

Above, we talked about rules that would be good to implement. Now we will discuss technical measures to enforce these rules.

Some of these rules will be implemented in contracts or laws, and their enforcement will be non-technical. To technically enforce rules which are part of a model spec, we recommend the following measures:

- Robust guardrails to prevent AI models from assisting with coups

- Alignment audits to detect secret loyalties

- Strong infosecurity to prevent unauthorised access to guardrail-free models and to prevent people from inserting secret loyalties

- System-level stress-testing, to check that all these protective measures are robust


How different preventative measures block three different ways to circumvent the model spec.

These technical measures would make model specs significantly harder to circumvent. Though a power-seeking leader could remove them, doing so becomes increasingly costly the more that measures are established, well-justified, and designed to minimise productivity costs.

Robust guardrails

AI projects currently use refusal training and monitoring to ensure AI models follow the model spec and don’t assist with harmful requests. These same techniques should be applied to prevent AI models from assisting with coups.

To ensure that these guardrails are robust, AI projects should carefully measure and continuously improve the extent to which AI systems comply with model specs. Achieving a high rate of compliance and reliably preventing coups may be difficult for several reasons:

- Firstly, the model spec might fail to prohibit coups in some contexts. Models should be tested in a very wide range of scenarios to check for edge cases where they would assist with a coup, and model specs should be iterated on accordingly. This red-teaming should be done by multiple independent groups.

- Secondly, a model might assist with a coup because the guardrails themselves are poorly implemented. Red-teaming would expose poor implementation and enable iteration.

- Thirdly, guardrails may fail to prevent a coup because it is fundamentally difficult to make AI systems adversarially robust, as is the case today. If red-teaming reveals that this is the case, then AI systems should be deployed more cautiously.84

In addition, it may be difficult for AI models to recognise coup-related misuse in a single session. Some coup-related misuse is continuous with perfectly legal activities (like lobbying), and defensive uses of AI may look very similar to offensive uses (for example, finding cyber vulnerabilities is a precursor to patching them). Some patterns of misuse might only become apparent over many sessions of usage.

Given these challenges, asynchronous logging and review should be used in addition to refusals and real-time monitoring to ensure AI models don’t assist with coups. AI projects should systematically log and then review patterns of activity, along with context about the users’ role and duties, to detect problematic use.85
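To make the idea concrete, here is a minimal Python sketch of cross-session review. It assumes, hypothetically, that each logged request already carries a category label from a real-time monitor and that each user has a per-category compute allowance derived from their role; the `LogEntry` fields, the category names and the threshold are all illustrative, not part of any existing system. What it shows is that activity which looks innocuous in any single session can stand out once it is aggregated across sessions and compared with the user's duties.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    # All fields are hypothetical; a real log would carry far more context.
    user: str       # who made the request
    session: str    # which session the request came from
    category: str   # label assigned by a (hypothetical) real-time monitor
    compute: float  # compute spent on the request, in arbitrary units


def review_logs(entries, role_allowances, threshold):
    """Aggregate activity per (user, category) across all sessions and flag
    totals that exceed the user's role-based allowance by more than `threshold`.

    role_allowances maps user -> {category: allowed compute}; users or
    categories missing from it get an allowance of zero.
    Returns a sorted list of (user, category, n_sessions, excess).
    """
    totals = defaultdict(float)
    sessions = defaultdict(set)
    for e in entries:
        key = (e.user, e.category)
        totals[key] += e.compute
        sessions[key].add(e.session)

    flagged = []
    for (user, category), total in totals.items():
        allowed = role_allowances.get(user, {}).get(category, 0.0)
        excess = total - allowed
        if excess > threshold:
            flagged.append((user, category, len(sessions[(user, category)]), excess))
    return sorted(flagged)
```

For example, with a threshold of 5.0, a user with no allowance for a category who spends 2.0 units in it in each of three separate sessions would be flagged, even though no single session crosses the threshold. In this sketch, flags are simply returned for human review.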

Alignment audits

Even if guardrails appear successful and a model seems to robustly follow the model spec, it may have been deliberately trained to give this impression while still harbouring secret loyalties. So it is important that models also undergo an “alignment audit” to check for secret loyalties.

An alignment audit is an investigation into whether models have hidden objectives.86

To develop effective alignment audits, we need more research into how to detect secret loyalties, building on the work of Marks et al (2025). This research should be shared with auditors, so that they are at the cutting edge of detection research.

And to effectively implement alignment audits, AI projects should grant auditors comprehensive access to model internals and training data.87 Ideally they should also grant access to the code for the training algorithms, the code used to generate the training data, the detailed commit-history that was used to construct this code, and logs of additional relevant information.88 This is important because the insertion of secret loyalties may require very specific techniques that would be significantly easier to detect for someone with access to full details about the code and data.

Alignment audits should ideally be conducted by independent third-party auditors to minimise conflicts of interest.89 If AI companies refuse to give full access to external auditors, then internal teams could run audits with greater access, and external auditors with more limited access.90

Infosecurity

Alignment audits help detect secret loyalties. AI projects should also implement strong infosecurity to make secret loyalties harder to insert in the first place, as well as to prevent unauthorised access to guardrail-free models.91

The most significant threat comes from insiders, especially senior executives within AI projects who might have or demand permissions that they could use to access guardrail-free models or insert secret loyalties. Security measures must therefore be robust to even the most senior insiders. There is also a risk that foreign adversaries could hack into systems to insert secret loyalties.

There are several possible techniques for preventing unauthorised access to guardrail-free models. Access to guardrail-free models could be restricted to APIs that log and monitor model usage, or could require multi-party permissions.92 More fundamentally, AI projects could avoid training guardrail-free models in the first place.93
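One way to read “multi-party permissions” is as a k-of-n approval rule: access to a guardrail-free model is granted only once several authorised people, other than the requester, have signed off. The minimal Python sketch below assumes that reading; `MultiPartyGate`, `AccessRequest` and the role names are hypothetical. It only illustrates the policy logic. In practice the rule would need to be enforced by infrastructure the requester cannot bypass (for example, signed approvals checked by the system that serves the model), since an in-process object like this offers no protection against a senior insider with direct access.

```python
from dataclasses import dataclass, field


@dataclass
class AccessRequest:
    requester: str
    purpose: str
    approvals: set = field(default_factory=set)


class MultiPartyGate:
    """Hypothetical k-of-n approval rule for access to a guardrail-free model.

    Access is granted only once at least k distinct authorised approvers,
    none of whom is the requester, have approved the request.
    """

    def __init__(self, approvers, k):
        if not 1 <= k <= len(approvers):
            raise ValueError("k must be between 1 and the number of approvers")
        self.approvers = frozenset(approvers)
        self.k = k
        self.audit_log = []  # every approval attempt is recorded, even rejected ones

    def approve(self, request, approver):
        self.audit_log.append((approver, request.requester, request.purpose))
        if approver not in self.approvers:
            raise PermissionError(f"{approver} is not an authorised approver")
        if approver == request.requester:
            raise PermissionError("requesters cannot approve their own access")
        request.approvals.add(approver)  # a set, so repeat approvals count once

    def is_granted(self, request):
        return len(request.approvals) >= self.k
```

Because the requester can never approve their own request, even the most senior insider needs the cooperation of k other approvers, and every attempt is recorded in `audit_log` for later review.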

A challenge for preventing unauthorised access is that any fine-tuning process could potentially create models that act against the original model spec, and technical staff frequently fine-tune models in the course of their research.94 More research is needed on how to robustly defend against coup risk from fine-tuning.95

There are also a range of possible techniques for preventing anyone from inserting secret loyalties. Promising approaches include:

- Having multiple people review all code used during model development.98

- Implementing strong access controls to prevent anyone from tampering with the development process or the model weights.

- Taking a digital fingerprint of models immediately after training (for example by hashing the weights) and saving that fingerprint in multiple safe places. By regularly checking that the fingerprint hasn't changed, people could confirm that no one has tampered with the model weights.
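The fingerprinting step can be sketched with SHA-256 from Python's standard `hashlib` module. This is a minimal illustration under assumed conditions: the weights are stored as one or more files, and the “multiple safe places” are represented simply as a list of independently stored copies of the fingerprint; the function names are hypothetical. Each file is hashed separately and the per-file digests are combined in sorted order, so the result does not depend on the order in which the files are listed. A fingerprint only shows that the weights have not changed since it was taken; it cannot reveal a secret loyalty inserted before then, which is why it complements rather than replaces code review and alignment audits.

```python
import hashlib
from pathlib import Path


def _file_digest(path, chunk_size=1 << 20):
    """SHA-256 of one file, read in chunks so large weight shards need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def fingerprint_weights(paths):
    """Combine per-file digests, in sorted path order, into a single fingerprint."""
    combined = hashlib.sha256()
    for path in sorted(Path(p) for p in paths):
        combined.update(f"{path.name}:{_file_digest(path)}\n".encode())
    return combined.hexdigest()


def verify_weights(paths, stored_fingerprints):
    """True only if at least one stored copy exists and every copy matches
    the fingerprint recomputed from the current weights."""
    current = fingerprint_weights(paths)
    return bool(stored_fingerprints) and all(fp == current for fp in stored_fingerprints)
```

If any stored copy disagrees with the recomputed fingerprint, either the weights or that copy has been altered, and both possibilities warrant investigation.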

Many of these security measures could reduce productivity or create legal risk.99 This makes it especially important to research approaches that maintain strong security while minimising operational friction. The more efficient these measures are, the more likely they are to be implemented in the first place and retained even under pressure.

System-level stress-testing

As a final layer of defence, AI projects should conduct system-level stress-testing to check that no one could use their models to stage a coup.

A designated red-team should try both to gain unauthorised access to guardrail-free models and to insert secret loyalties. They should do this in a maximally realistic setting, to holistically stress-test the guardrails, infosecurity measures and alignment auditing.

Comprehensive system-level stress-testing of this sort would show whether the combination of measures above is strong enough to prevent AI systems from assisting with a coup.

5.3 Empowering multiple actors to prevent AI-enabled coups

The final broad class of mitigations for AI-enabled coup risk is to empower multiple actors to prevent AI-enabled coups. This is important because it makes it much harder for any single person or small group to gain the overwhelming advantage needed to overpower other actors and stage a coup.

Our main recommendations for empowering multiple actors are:

- Increase transparency into AI capabilities, compute usage, and safeguards against AI-enabled coup risk

- Distribute decision-making authority over AI development and deployment

- Share access to AI capabilities with multiple stakeholders

- Coup-proof any plans for a centralised project, and avoid centralisation unless it’s necessary to reduce other risks.

Increasing transparency

Transparency helps to prevent AI-enabled coups by allowing a larger number of actors to notice risks, learn more about them, and intervene.

AI projects should share information about:

- AI capabilities. If other actors know that an AI project has developed powerful capabilities in AI R&D, military R&D, cyber, strategy, or persuasion, they can take actions to prevent those capabilities from being misused. It is important that capabilities are made transparent even for models which are only deployed within AI projects, as internally deployed models responsible for future AI R&D could pose significant coup risk.100

- How AI is used. Transparency into who is using AI systems, for what, and with how much compute, makes it easier for other actors to notice illegitimate behaviour and take actions to prevent it. This is particularly important for high-stakes deployment situations like the military or AI R&D. In these cases, information should be shared with trusted external actors, and redacted versions should be made public. In addition, AI systems should be configured to actively alert independent stakeholders if someone requests assistance with a coup (“AI whistleblowing”).

- Model specs describing what AI systems were trained to do. Model specs should always be shared with users so as to protect them from using AI systems that act against their interests. In high-stakes deployment situations like AI R&D and military applications, the full model spec should be shared with multiple stakeholders and redacted model specs should be made public, to enable broader scrutiny and increase pressure against coup-enabling model specs. AI projects should also publish information about how well their models actually follow the model specs, to expose poor implementation.

- Safeguards against AI-enabled coups. AI projects should also be transparent about their safeguards against AI-enabled coups. This both makes it easier to assess the level of risk, and creates pressure on organisations with inadequate protections to improve them.

- Risk assessment. AI projects should also conduct their own analysis of how large the risk of an AI-enabled coup is, and publish redacted versions of these assessments.

From the perspective of minimising the risk of a small group seizing power, it would be ideal to make all of this information public. But this will not always be possible, for example in cases where information sharing would pose a national security risk, enable misuse or endanger IP. Even in these cases, however, it is essential that the information is shared with multiple trusted actors, including:

- A significant number of employees at the AI project,

- Officials in the government executive,

- Representatives in the legislature, including multiple people from all major parties,101 and

- Relevant expert bodies, like AI Safety Institutes and independent auditing organisations.

Where possible, it would also be risk-reducing to share information with academics, allied countries, civil society representatives, and journalists.

It is especially important that independent auditors are granted sufficiently deep access that they can verify that shared information is accurate and that any redactions are appropriate.102 This would enable non-technical stakeholders like government officials to hold AI projects accountable.

As a failsafe measure, comprehensive whistleblower protections should also be implemented.103 These should include secure reporting channels and protection from retaliation, increasing the chance that important information comes to light in cases where other transparency measures fail.

Distributing decision-making authority

Transparency makes it more likely that other actors understand the extent of coup risk. In addition, it’s very valuable to give more actors more influence over AI development and deployment decisions so that they can directly reduce the risk.

There are several ways of distributing decision-making authority, including:

- Within AI projects. AI projects should establish governance structures which distribute power, for example through strong, independent boards with oversight authority. And employees should retain influence over AI development even after it is possible to replace them with AI systems.

- Between AI projects and governments. Contracts between companies and governments should give both parties significant bargaining power, especially the power to veto particularly dangerous projects or deployments. Regulation could also require AI projects to seek approval prior to certain dangerous AI developments or deployments.

- Internationally. Decision-making could be distributed between countries formally through international agreements akin to the Manhattan Project’s Quebec Agreement,105 or de facto through supporting frontier AI development and the compute supply chain in multiple countries.

Using contracts to distribute influence between AI projects and governments presents a particularly promising approach, as this is relatively tractable to implement while addressing a significant portion of AI-enabled coup risk.

Sharing access to AI capabilities

Another crucial way to empower multiple actors is to share access to frontier AI capabilities widely. This reduces the risk that a single person or small group gains exclusive access to the most powerful capabilities and leverages them to stage a coup.