Appendices to AI Tools for Existential Security

Released on 14th March 2025

This page contains appendices for the piece AI Tools for Existential Security.

Appendix 1: On whether accelerating applications could be bad via speeding up AI progress in general

What about the concern that any acceleration of an AI application might shorten timelines to transformative AI, and thereby reduce the odds of successfully navigating it? (People differ on how seriously to take this concern, and without consensus on this point it may be difficult to give a single answer that satisfies all readers.)

In brief:

- Some applications, like automated negotiation tools, seem to be peripheral to core AI progress

- So the only impact they might have on timelines would be via:

- Contributing to general hype and increasing investment (shortening timelines)

- Pulling talented people onto them and away from other areas of AI (lengthening timelines)

- In these cases, the net effect on timelines is likely to be very small, so for applications that are well chosen for the benefits of accelerating them, the bigger effects very likely do not come via timelines

- Other applications might, if successful, have nontrivial impacts on AI timelines (e.g. automation of research in one area may have spillover effects that help automation of research in another area)

- In these cases, in considering whether to accelerate these applications, the ultimate comparison will be between:

- (i) the expected benefit from having access to this application earlier on

- (ii) the expected amount that background variables would be in better shape as a result of the delay in overall AI timelines that comes from not pursuing the application

- If having a better version of the application available would shorten AI timelines, that effect should be weighed as a cost.

- Note: For any particular view on the undesirability of bringing forward AI timelines, there will likely be some circumstances in which the benefits of differential application development outweigh the costs

- Moreover these may change over time — as AI applications drive larger slices of what is important in the world, the relative costs of forgoing the benefits of pursuing AI applications will rise
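
As a rough illustration of the comparison between (i) and (ii) above, here is a minimal Python sketch. The function name, the linear form, and all numbers are assumptions made for illustration, not estimates from this piece. It simply treats any shortening of timelines as a cost proportional to how much a year of extra time is judged to be worth.

```python
def net_value_of_accelerating(benefit_from_earlier_access: float,
                              timeline_shortening_years: float,
                              value_per_year_of_delay: float) -> float:
    """Toy comparison of (i) against (ii), in arbitrary units of value.

    Assumption (not from the source): the cost of shortened timelines
    is linear in the number of years by which timelines shorten.
    """
    # (ii): what is lost because background variables have less time to improve
    timeline_cost = timeline_shortening_years * value_per_year_of_delay
    # (i) minus (ii): positive means acceleration looks worthwhile in this toy model
    return benefit_from_earlier_access - timeline_cost


# Hypothetical peripheral application: no measurable effect on timelines
print(net_value_of_accelerating(10.0, 0.0, 50.0))
# Hypothetical application with spillovers that shorten timelines by half a year
print(net_value_of_accelerating(10.0, 0.5, 50.0))
```

On these made-up inputs the first case comes out positive and the second negative. A negative `timeline_shortening_years` (for example, talent pulled away from other areas of AI) would turn the timeline term into a benefit rather than a cost.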

Appendix 2: On dynamics that make it harder to meaningfully counterfactually accelerate an application (and when meaningful acceleration still looks achievable)

As a general rule, it’ll be hard to achieve very strong and long-lasting boosts in the trajectory of an application. Market forces or improving technology might swamp our investments, and the paradigms we’re relying on might evolve quickly enough to make our work futile. But investing significant resources towards accelerating some useful application might still be valuable enough if:

- 1) The counterfactual acceleration arrives at a crucial time

- Even if the acceleration washes out quickly, it may be vital if it boosts a key capability we need to better navigate a major challenge

- 2) The counterfactual acceleration is reasonably persistent

- An initial acceleration may compound, helping the capability advance more in the future; while this won’t be perpetual, it could widen the window for the intervention to have a long-lasting impact via one of the other routes

Still, both of these “impact cases” rely on believing that we can achieve at least some non-trivial acceleration, and “futility dynamics” may shrink the counterfactual acceleration that we can achieve in different cases, so it’s worth discussing these concerns.

Swamping & substitution effects (via market forces and improving technology)

Three broad dynamics might make us concerned that our opportunity to make a real difference is limited.

- There might be little scope to accelerate most applications initially, as market forces fill many of the best opportunities

- Market forces might compensate for goal-driven investment, weakening compounding effects. If we invest in accelerating some application, we might just be displacing equivalent work that the market would have incentivized anyway, so the trajectory will return to equilibrium unless we keep pushing it up

- Automation might make our investments trivially easy to replicate at a later point when costs have fallen

These dynamics might diminish the possible counterfactual impact, but shouldn’t be viewed as deal-breakers:

- They might only have an effect slowly (e.g. perhaps the market compensates eventually, but takes a year or two to adjust)

- And we might be able to target areas where these dynamics are significantly weaker:

- We can invest in applications that are poorly incentivized by the market

- We can invest in areas or interventions that are likely to be automated more slowly, like work that involves human input or experiments on biological time-scales

- And we can leverage automation or market forces by demonstrating the value of some applications, shifting the resulting incentives
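
To make the point about slow compensation concrete, the sketch below models a head start that the market gradually erodes. Exponential decay, the function name, and the example numbers are all assumptions chosen for simplicity. The text does not commit to any particular functional form.

```python
import math


def remaining_lead_months(initial_lead_months: float,
                          years_elapsed: float,
                          market_adjustment_years: float) -> float:
    """Toy model: the counterfactual lead decays exponentially.

    Assumption (not from the source): market compensation erodes the lead
    with characteristic time `market_adjustment_years`.
    """
    return initial_lead_months * math.exp(-years_elapsed / market_adjustment_years)


# Hypothetical 6-month head start, checked one year later
fast_market = remaining_lead_months(6.0, 1.0, market_adjustment_years=0.5)
slow_market = remaining_lead_months(6.0, 1.0, market_adjustment_years=2.0)
print(f"fast-adjusting market: {fast_market:.1f} months of lead left")
print(f"slow-adjusting market: {slow_market:.1f} months of lead left")
```

In this toy model, targeting areas where the market adjusts slowly leaves more of the lead in place at any given time, which is one way to read the claim that the dynamics are weaker there.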

Irrelevance of our work (via nearsightedness or rapidly evolving foundations)

We might also worry that our work is just irrelevant by the time it could start producing results. AI systems are improving and changing fairly rapidly. Some interventions we could invest in might quickly become irrelevant, either by no longer working with new systems or by becoming unnecessary. (For example, previous generations of automated translation tools were obsoleted by deep learning approaches.)

But interventions might still look highly promising if we properly account for this dynamic. For instance, this is a reason to aim for shorter-horizon accelerations (since predicting the near future is easier, and the paradigms will probably shift less) or to pursue more general interventions, like curation of high-quality datasets (which depend on more robust paradigms), over the development of model-specific scaffolding improvements.

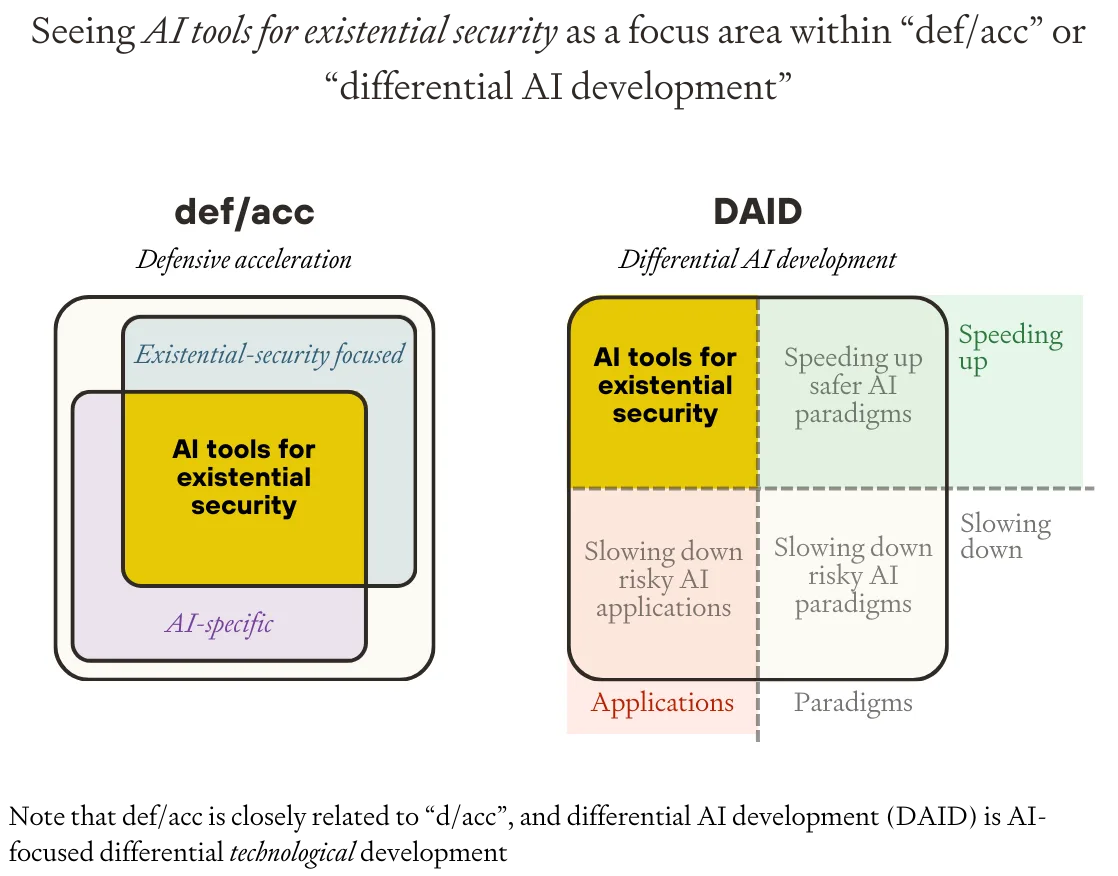

Appendix 3: How does this agenda relate to existing concepts, like differential technological development and def/acc?

Def/acc and differential technological development

Trying to accelerate some AI applications in the pursuit of existential security could be seen in terms of existing concepts. In particular, we might interpret it as:

- def/acc, specialized to AI (and more focused on existential security instead of broadly defending society)

- Differential technological development (DTD),1 specialized to accelerating (and not slowing down) safety-increasing AI applications

We can visualize the relationship between def/acc, “differential AI development” (AI-focused DTD), and our focus area:

[Figure: the relationship between def/acc, differential AI development, and our focus area]

More on “Differential AI development” (DAID)

We could see this project as the side of “differential AI development” (DAID) — AI-specific differential technological development — that is not focused on shaping the underlying technology, and instead focuses specifically on accelerating beneficial applications of AI. We can call this “differential application development.”

Other sides of DAID also seem quite promising.

- Slowing down risk-increasing AI developments should be part of the portfolio, but we think it’s less promising and less neglected than accelerating risk-reducing applications

- It might be worth giving more attention to accelerating safer paradigms of AI development.

Appendix 4: “Differential paradigm development” (a different side of DAID)

The “differential paradigm development” (DPD) side of DAID involves trying to help safer-seeming AI research paradigms succeed (e.g. preferring scaffolded LLM agents to end-to-end trained agents, for transparency reasons). It could also involve trying to shape other AI-related foundations — like the way key applications are structured and integrated with each other, or central institutions underlying AI development.

The line between differential application development and differential paradigm development is somewhat blurry. In some cases an application of one generation of technology becomes a paradigm that subsequent generations are built on. In other cases, the value of developing an application may be that it enables the boosting of a particular paradigm. But typically there will be a difference. Here we’ll quickly survey how we might think about DPD.

DPD may be high leverage because:

- There’s a natural opportunity for path-dependency — what people build in the future depends on the foundations available to build on.

- So the possibility for long-lasting change is substantial

- Some paradigms look much safer / more robust than others

- e.g. transparency properties vary substantially by paradigm

On the other hand, there are some reasons for skepticism about DPD:

- There may be some degree of technological inevitability about which paradigms win (although we don’t think this inevitability is absolute)

- Dominant paradigms might often be developed by deep-pocketed actors, so it might be very expensive to seriously compete by pushing rival paradigms

- Perhaps the realistic interventions look more like “make sure that promising early-stage paradigms get enough investment to have a serious shot” than “keep ploughing resources into things that would have taken off already if they were going to”

- Pushing forward certain AI paradigms might have a greater effect on overall AI progress than focusing on specific AI applications does

Which paradigms might we focus on?

As a general rule, one paradigm might seem safer than others if:

- It is inherently safer or more robust

- e.g. RL selecting for behaviour seems more likely to introduce subversive goals than something which doesn’t exert direct selection pressure on top-level planning

- It facilitates certain safety techniques

- e.g. Scaffolded LLM agents naturally have a high level of transparency, which could help in various ways

- It facilitates some generally beneficial application

- e.g. Paradigms that permit better data provenance may make it easier to build applications helping social epistemics and truth-seeking by making it easier and more natural to trace the provenance of claims

The technological landscape may render some paradigms non-viable. It may be important to assess the feasibility of a paradigm so as not to waste effort on behalf of something that is ultimately a non-starter.

What can we do to differentially accelerate a paradigm?

- Just work on the preferred paradigm

- Advancing the paradigm, and showing people how to do impressive things within it, makes it more attractive for others to work within

- If there is some path dependency in what route civilization takes, this could precipitate a trajectory shift — the favoured paradigm builds momentum until more and more people use this rather than another

- Working on a paradigm could mean building out systems within that paradigm, or could mean developing metrics which help to support it

- e.g. benchmarks for honesty of AI systems may help facilitate the development of highly honest systems

- Social interventions to make the preferred paradigm more popular

- Among researchers

- Among non-researchers (perhaps getting people to ask for some feature that the paradigm provides especially easily)

- Changing the incentive landscape

- Subsidies for the preferred paradigm

- Taxes or increased regulatory burden for the dispreferred paradigm(s)

Appendix 5: Why we focus on accelerating risk-reducing applications rather than slowing down risky applications

Focusing on differential development could in principle mean speeding up the good things, or slowing down the bad things.

There are two big reasons why speeding things up often looks like the better plan:

- It’s more cooperative with the rest of the world

- It’s easier to implement

(Of course, these are related.)

Speeding things up is more cooperative

There are a lot of people in the world trying to do a lot of different things. Many are earnestly trying to accelerate all kinds of powerful technology, and there are often real costs to delaying those technologies. This means that working to slow things down is inherently somewhat adversarial — with all the downsides that entails2.

In contrast, pretty much everyone will be on board with speeding up beneficial applications (even if they have different priorities). This work could attract much broader coalitions, and build more momentum, without the same friction.

Speeding things up is easier

This might be surprising. Slowing things down seems in some sense simpler — the world just needs to not do anything! But technological progress is more unilateralist than consensus-based. If anyone makes the breakthrough, the breakthrough is made. And in a market economy where there are large financial prizes for success, it’s hard to seriously slow things down by local intervention — if you discourage certain investors or developers from pursuing an innovation, others may step in to fill in the gap.3 AI developers seem strongly incentivized to push forward.

In contrast, the unilateralist nature of things works to your advantage if you’re trying to speed things up. You don’t need to build a consensus that it would be important to do X — you can just go and do it.4
